Chances are you used artificial intelligence before you even had your first cup of coffee this morning.

Maybe you woke up and asked Alexa or Siri what the weather was. That’s AI.

You grabbed your phone and scrolled through your social media feed. The reason that feed was either uncannily relevant or bizarrely random is an AI algorithm. You opened your email and saw, hopefully, an inbox of only important messages. That’s because an AI spam filter silently quarantined all the junk. You typed out a quick “On my way!” text, and your phone’s autocorrect fixed your typo.

Then, you got in your car and opened Google Maps. The route it suggested, the one that cleverly dodged a new accident on the freeway, was calculated by an AI processing real-time traffic data from millions of other users.

Here’s the first, and most important, thing to know about AI: its biggest successes are designed to be invisible.

This creates a strange perception gap. A Gallup poll found that while 99% of U.S. adults use at least one AI-enabled product (like navigation, streaming, or online shopping), only 36% report having used AI. We use it all day, every day, but we don’t see it.

The most useful AI is quiet. It works in the background to make our existing tools better: a smarter map, a cleaner inbox, a more helpful keyboard. This invisibility creates a vacuum, and our collective imagination fills that vacuum with the only AI we do see: the loud, visible, and frankly terrifying kind from Hollywood.

So, What’s the Big Deal? (And Why Isn’t It Terminator?)

Let’s get this out of the way. When most people hear “AI,” they picture The Terminator, The Matrix, or HAL 9000. They imagine a sentient, conscious, emotional machine that will wake up one day and decide to “pull the plug”.

This is, by far, the biggest misconception in all of technology.

The AI we have today does not “think,” “feel,” “want,” or “understand” in any human sense of those words. It doesn’t have consciousness, emotions, or intentions. A chatbot can be programmed to recognize emotional cues in your text and respond in a way that simulates empathy, but it feels nothing. It is a complex, pattern-matching machine.

To clear this up, we need a simple framework. This is the single most important concept you can learn to separate the hype from reality. All AI falls into two categories.

1. Artificial Narrow Intelligence (ANI)

This is also called “Weak AI” or, as I prefer, “Specialist AI.” This is the only type of AI that exists today.

An ANI is a specialist. It is designed and trained to perform one single, narrow task. The AI that plays chess is an ANI. It can beat a grandmaster, but it cannot use that intelligence to do anything else. It can’t even play checkers, let alone order a pizza or understand a joke.

Your spam filter is a brilliant ANI, but it’s spectacularly stupid at everything else. The AI used in hospitals to detect lung cancer nodules from a CT scan is an ANI. It might be more accurate than a human radiologist at that one task, but you can’t ask it for directions.

2. Artificial General Intelligence (AGI)

This is the “movie AI”. AGI is the hypothetical, theoretical idea of a machine that could match or surpass human intelligence across all cognitive tasks. It wouldn’t just be good at chess; it could also compose a symphony, write a novel, develop a scientific theory, and navigate the social and emotional complexities of human life.

This does not exist.

We are not, by most expert accounts, even close. We don’t have a clear roadmap to AGI, not least because we still have a very poor understanding of what “consciousness” or “sentience” even is.

The public’s fear of AI stems from a fundamental “category error.” We see an ANI (like a new chatbot) perform a task that looks human (like writing a poem), and we instinctively attribute AGI-level “human-ness” to it.

When a chatbot “hallucinates” and admits to spying on employees or falling in love (which has happened), it’s not “thinking”. It’s just a complex statistical model predicting the next most plausible word in a sequence, based on the billions of human-written, and often very dramatic, web pages it was trained on.

It’s a statistical echo, not a ghost in the machine.

The “Flip”: The Big Idea That Makes AI Work

For decades, we’ve had computers. So, what changed?

The “how” is a fundamental shift in programming, an idea called Machine Learning (ML). In fact, most of the AI you hear about is more accurately described as machine learning, which is itself a subset of AI.

Let’s use a simple analogy: a recipe.

Traditional Programming is like giving a chef a perfect, fixed recipe. You provide explicit, step-by-step rules. “Add 2 cups of flour. Add 1 cup of sugar. Mix for 3 minutes.” It’s like the simple rules in a thermostat: IF room_temperature < 67, THEN turn_on_heater.

This is great for predictable, mathematical tasks like calculating a budget. But this “rigid” approach shatters when faced with “fuzzy” real-world problems.

Imagine trying to write a “recipe” for identifying spam.

- Rule 1: IF email contains “Viagra,” THEN it’s spam.

- Spammers adapt: They write “V1agra.”

- Rule 2: IF email contains “V1agra,” THEN it’s spam.

- Spammers adapt: They use “V!agra” or add a bunch of “good” words.

You’d be stuck in this game forever. It’s impractical to write rules for a problem that complex and “evolving”.
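To make the cat-and-mouse game concrete, here is a minimal sketch of a rule-based filter. The blocklist and the function name are made up for illustration; no real spam filter is this simple.

```python
# Hand-written rules, in the spirit of "IF email contains X, THEN it's spam".
# BLOCKED_WORDS is a hypothetical blocklist covering only Rule 1 and Rule 2.
BLOCKED_WORDS = {"viagra", "v1agra"}


def is_spam_by_rules(email_text: str) -> bool:
    """Return True if any blocked word appears in the email."""
    words = email_text.lower().split()
    return any(word.strip(".,!?") in BLOCKED_WORDS for word in words)


print(is_spam_by_rules("Cheap Viagra today"))   # matches Rule 1
print(is_spam_by_rules("Cheap V1agra today"))   # matches Rule 2
print(is_spam_by_rules("Cheap V!agra today"))   # no rule covers this spelling
```

The third message slips through because nobody has written a rule for it yet. Every new spelling means another manual update.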

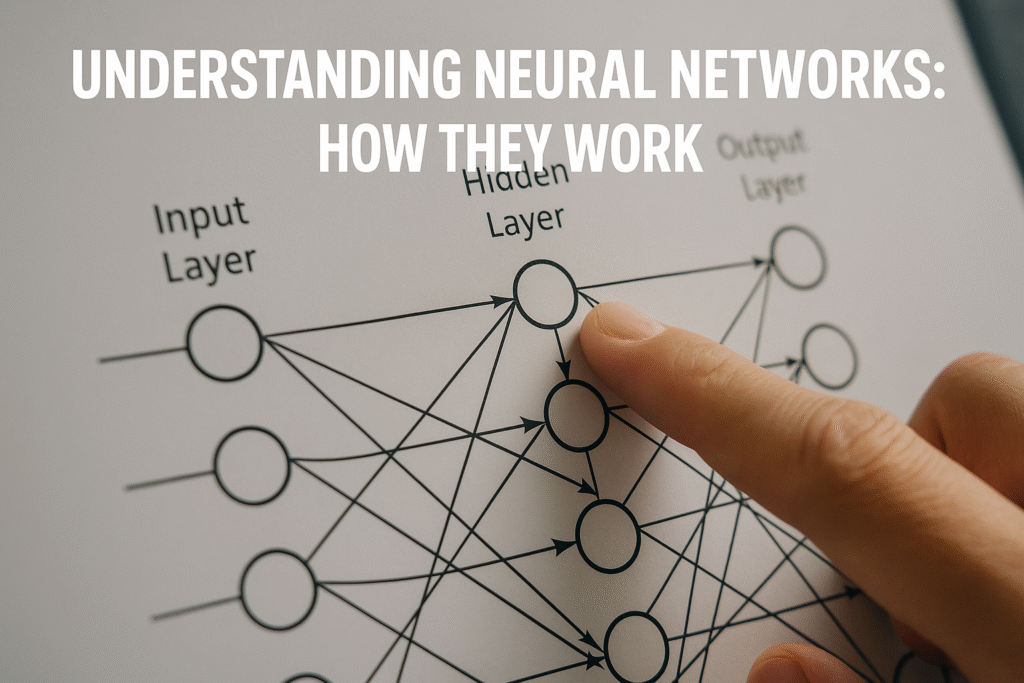

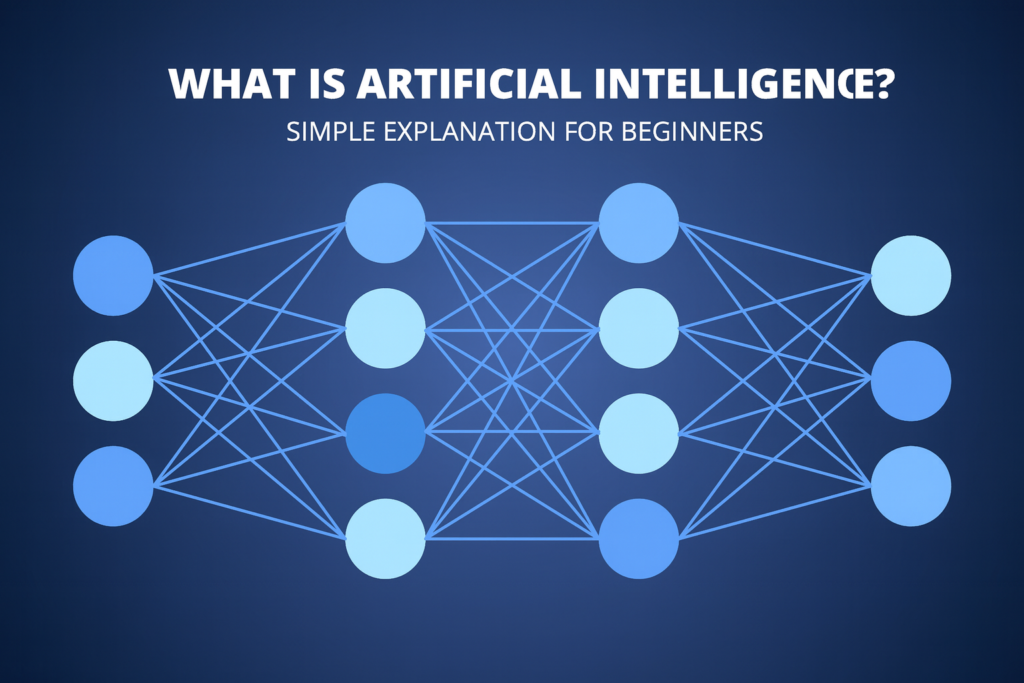

Machine Learning (ML) flips the entire script.

Instead of giving the computer rules (the recipe), we give it data (examples) and the answers (labels), and we ask it to figure out the rules for itself.

This is the “chef who learns from experience”.

We don’t give the chef a recipe. We give them 10,000 examples of finished cookies (the data) and the label “this is a good cookie.” Then we give them 10,000 examples of burned, salty messes (more data) and the label “this is a bad cookie.”

The chef (the ML model) then “learns” by finding the complex statistical patterns, the “rules,” that all the good cookies have in common. It’s not programming; it’s training.

This “flip” is the key. We don’t have to know the rules for what makes a “cat” in a photo. We just have to show a machine a million pictures labeled “cat” and a million labeled “not cat.” The machine will learn the patterns—the “rules” for cat-ness—all on its own.
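Here is a rough sketch of the flip applied to spam, using a simple word-counting approach (a naive Bayes-style classifier, chosen here only because it fits in a few lines). The six training emails are invented, and a real system would learn from millions of examples. Notice that nobody writes a rule about any particular word; the word statistics come from the labeled examples.

```python
import math
from collections import Counter

# Tiny, made-up training set of (text, label) pairs.
TRAINING_DATA = [
    ("win a free prize now", "spam"),
    ("free money claim your prize", "spam"),
    ("cheap pills free shipping", "spam"),
    ("meeting moved to friday", "ham"),
    ("lunch on friday with the team", "ham"),
    ("please review the project notes", "ham"),
]


def train(examples):
    """Count how often each word appears under each label."""
    word_counts = {"spam": Counter(), "ham": Counter()}
    for text, label in examples:
        word_counts[label].update(text.lower().split())
    return word_counts


def classify(word_counts, text):
    """Pick the label whose learned word statistics best match the text."""
    vocabulary = set(word_counts["spam"]) | set(word_counts["ham"])
    scores = {}
    for label, counts in word_counts.items():
        total = sum(counts.values())
        # Add-one smoothing so an unseen word does not zero out the score.
        # Class priors are skipped because the two classes are the same size.
        scores[label] = sum(
            math.log((counts[word] + 1) / (total + len(vocabulary)))
            for word in text.lower().split()
        )
    return max(scores, key=scores.get)


model = train(TRAINING_DATA)
print(classify(model, "claim your free prize"))
print(classify(model, "notes from the friday meeting"))
```

With this toy data, the first message comes out as spam and the second as ham, even though neither exact sentence appeared in training.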

A Quick Look at the “Teaching” Methods

Once you understand that ML is about “training” a model, you’ll see there are a few different ways to “teach” it. They are all valuable and are used for different kinds of problems.

1. Supervised Learning (Learning with an “Answer Key”)

This is the most common method and exactly like the cookie example. The “supervision” comes from the fact that we give the AI a massive dataset where all the answers are already labeled.

- Analogy: You’re a student studying for a test with a “massive deck of photos… already labeled ‘cat’ or ‘not a cat’”.

- How it works: The model is “trained on thousands of emails labeled as ‘spam’ or ‘not spam’”. It makes a prediction (“I think this is spam”). It checks its prediction against the “ground truth” label. If it’s wrong, it adjusts its internal parameters to reduce the error. It does this millions of times until it’s highly accurate.

- The Goal: Prediction. This is used for “classification” (Is this email spam or not spam?) and “regression” (Predicting a continuous value, like a house price, based on historical data).
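The predict, check, adjust loop described above can be sketched for the regression case. This is a minimal illustration using plain gradient descent on a straight line; the house data is made up so that price = 2 × size + 1, and the learning rate and epoch count are arbitrary choices that happen to work for this toy data.

```python
# Made-up data: (size in thousands of square feet, price in $100k).
# The hidden relationship is price = 2 * size + 1.
HOUSES = [(1.0, 3.0), (2.0, 5.0), (3.0, 7.0), (4.0, 9.0)]


def train_linear_model(data, learning_rate=0.05, epochs=5000):
    """Fit price = weight * size + bias by repeated predict-check-adjust."""
    weight, bias = 0.0, 0.0
    for _ in range(epochs):
        for size, true_price in data:
            predicted = weight * size + bias         # 1. make a prediction
            error = predicted - true_price           # 2. compare with the label
            weight -= learning_rate * error * size   # 3. nudge the parameters
            bias -= learning_rate * error            #    to shrink the error
    return weight, bias


weight, bias = train_linear_model(HOUSES)
print(round(weight, 2), round(bias, 2))
print(round(weight * 5.0 + bias, 2))  # prediction for a size the model never saw
```

The model is never told the formula. It starts from zero and should end up very close to a weight of 2 and a bias of 1 purely by correcting its own mistakes.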

2. Unsupervised Learning (Learning to Find Groups)

This is what you use when you don’t have an answer key. You just have a massive, unlabeled pile of data. You dump it on the AI and say, “Find the hidden patterns”.

- Analogy: “Imagine you are given a huge box of books”. You don’t know the genres, but you can sort them. You start “clustering” them on your own: all the “mystery novels” in one pile, all the “textbooks” in another. You find the structure yourself.

- How it works: The AI scans the data and groups it based on similarities. It doesn’t know why a group is a group, but it can tell they belong together.

- The Goal: Discovery. This is used for “clustering,” like customer segmentation. A company can use it to “group customers based on their purchasing behaviors” to find new marketing audiences. It’s also used for “anomaly detection” in cybersecurity—spotting one “book” that looks nothing like the others.
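As a rough sketch of clustering, here is a bare-bones version of k-means (one common clustering method, picked here for illustration) run on made-up customer spending figures. The function assumes at least two clusters, and the deterministic starting centers are a simplification; real implementations usually start from random points.

```python
# Made-up monthly spend (in dollars) for ten customers; no labels attached.
SPENDING = [12, 15, 14, 10, 95, 102, 98, 13, 101, 97]


def k_means_1d(values, k=2, iterations=10):
    """Group numbers into k >= 2 clusters by repeatedly refining the centers."""
    ordered = sorted(values)
    # Deterministic start: spread the initial centers across the sorted data.
    centers = [ordered[i * (len(ordered) - 1) // (k - 1)] for i in range(k)]
    clusters = []
    for _ in range(iterations):
        # Assignment step: each value joins its nearest center.
        clusters = [[] for _ in range(k)]
        for value in values:
            nearest = min(range(k), key=lambda i: abs(value - centers[i]))
            clusters[nearest].append(value)
        # Update step: each center moves to the mean of its members.
        centers = [
            sum(members) / len(members) if members else centers[i]
            for i, members in enumerate(clusters)
        ]
    return centers, clusters


centers, clusters = k_means_1d(SPENDING)
print(centers)
print(clusters)
```

The code splits the customers into a low-spend group and a high-spend group without ever being told those groups exist. Naming them (“bargain hunters,” “big spenders”) is still a human’s job.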

3. Reinforcement Learning (Learning by Trial and Error)

This method is totally different. It doesn’t need a static dataset. It needs an “agent” that can take “actions” in an “environment” to maximize a “reward”.

- Analogy: This is exactly like “teaching a dog a new trick”.

- How it works: The agent (the dog) is in an environment (your living room). It tries an action (it sits). It receives a reward (a treat). It tries another action (it barks). It receives a negative reward (a firm “no”). After many, many trials, the agent “learns the sequence of actions that leads to the most treats”.

- The Goal: Action. This is how an AI learns to play a game like chess or Go. It plays against itself millions of times, “learning” which moves (actions) lead to a “win” (reward). It’s also used to teach a robot how to navigate a maze.
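Here is a minimal sketch of trial-and-error learning, using tabular Q-learning (one standard reinforcement learning method) in a made-up environment: a five-cell corridor where the only reward, the “treat,” sits in the last cell. The learning rate, discount, and exploration values are illustrative assumptions.

```python
import random

N_STATES = 5          # corridor cells 0..4; the "treat" is at cell 4
GOAL = N_STATES - 1
ACTIONS = (-1, +1)    # step left or step right


def train_agent(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: learn the value of each action in each cell."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state = 0
        while state != GOAL:
            # Explore at random some of the time, and whenever there is a tie.
            explore = rng.random() < epsilon or q[state][0] == q[state][1]
            if explore:
                action = 0 if rng.random() < 0.5 else 1
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = min(max(state + ACTIONS[action], 0), GOAL)
            reward = 1.0 if next_state == GOAL else 0.0
            # Move the estimate toward reward + discounted future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train_agent()
policy = ["left" if left > right else "right" for left, right in q_table[:GOAL]]
print(policy)
```

Nobody tells the agent that “right” is the correct move. After enough episodes, its learned values favor stepping right in every cell, because that is what leads to the reward soonest.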

This simple “Prediction, Discovery, Action” framework is a powerful way to understand what kind of AI you’re looking at.

Why Did AI Suddenly Get So Good?

This is the big question. AI concepts have been around since the 1950s. So why did this explosion happen now?

It wasn’t one single breakthrough. It was the moment when three key “pillars” finally came together, creating a “virtuous cycle” or a self-feeding loop.

Pillar 1: The Fuel (Big Data)

Machine learning, especially the advanced kind called “Deep Learning,” is “data-hungry”. It needs millions, or billions, of examples to learn from. For decades, we simply didn’t have that data.

Then, the internet happened.

We all, collectively, started creating “massive amounts of labeled data” for free. Every time you upload a photo to Facebook, tag a friend, “like” a post, write a product review on Amazon, or just click a link, you are creating the “essential fuel that powers the entire AI engine”. “Big Data” is the food for AI.

Pillar 2: The Engine (A Happy Accident from Gaming)

Having all that data is useless if you can’t process it. A traditional computer chip, the CPU (Central Processing Unit), is like a “sequential” master chef who can only do one thing at a time. Training an AI model this way would take years.

The breakthrough was a “happy accident” from video games.

Gamers needed chips that could render complex graphics. This requires a different kind of processing: doing thousands of simple calculations (like “how does this ray of light bounce?”) all at the same time. This is called “massively parallel” processing, and it’s done by a GPU (Graphics Processing Unit).

It turns out that the math inside an AI model—called “matrix multiplication”—is also a task that can be split into thousands of simple calculations. Researchers realized that GPUs, originally built for “gaming and rendering graphics,” were the perfect engine for AI. This is why a gaming chip company, Nvidia, suddenly became one of the most valuable companies in the world.
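To see why this math splits up so well, here is a plain-Python sketch of matrix multiplication. It runs sequentially on a CPU, so it shows only the structure of the work, not the speedup; the matrices are arbitrary example values.

```python
def matmul(a, b):
    """Multiply matrices a (n x m) and b (m x p), given as lists of rows."""
    rows, inner, cols = len(a), len(b), len(b[0])
    result = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            # Each output cell is its own small dot product. No cell depends
            # on any other, so a GPU can compute thousands of them at once.
            result[i][j] = sum(a[i][k] * b[k][j] for k in range(inner))
    return result


inputs = [[1, 2], [3, 4]]
weights = [[5, 6], [7, 8]]
print(matmul(inputs, weights))  # [[19, 22], [43, 50]]
```

The two outer loops are the part a GPU replaces: instead of visiting each cell in turn, it hands each cell to a separate tiny worker.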

Pillar 3: The “Recipes” (Smarter Algorithms)

With the fuel (data) and the engine (GPUs), we just needed better “recipes.” And we got them. Scientists refined their training methods and developed new “architectures” (types of models) that were far more powerful. These include refinements to backpropagation (the way the model “learns” from its errors) and architectures like LSTMs (which are good at handling sequences, like text).

The big one came in 2017: the Transformer architecture. This is the “T” in “GPT” (Generative Pre-trained Transformer). This new algorithm was exceptionally good at understanding context in language, and it’s the technology behind the entire generative AI boom.

These three pillars feed each other. More data lets us build bigger, more powerful algorithms (like Transformers). Those huge algorithms require powerful GPUs to run. Those GPUs, in turn, allow us to process even more data. This feedback loop is the real engine of the AI explosion.

Where AI Is More Than a Gadget

So, we have the “how” and “why.” But what’s the “so what?”

Beyond smart maps and spam filters, this technology is being applied to some of humanity’s most high-stakes problems. The recurring theme here is augmentation, not replacement. AI isn’t replacing the expert; it’s giving them a superhuman “second reader”.

In the Hospital (Medicine)

- Radiology: A radiologist’s job is to find a tiny, subtle pattern (a tumor) in a sea of “noise” (a scan). AI is incredibly good at this. Studies have shown AI “second readers” can help doctors spot “29% of previously missed [lung] nodules”. AI can “classify brain tumors in under 150 seconds” during surgery, a task that normally takes 20-30 minutes. It’s also being used to detect breast cancer and early signs of Alzheimer’s.

- Drug Discovery: This is one of the most stunning examples. A company like Atomwise can use its AI to “screen more than 100 million compounds each day” to see if they might work on a disease. Insilico Medicine used its AI to design a novel drug candidate in a matter of weeks, at the front end of a process that normally takes years and costs billions.

- Personalized Medicine: AI is helping to analyze genomic data, moving us from “a drug for everyone” to “a drug for you”.

At the Bank (Finance)

- Fraud Detection: This is a classic “anomaly detection” task. When you make an “unusual” purchase and instantly get a text from your bank, that’s not a human watching your account. It’s an AI model. It has “analyzed your buying behavior” and spotted a transaction that breaks the pattern. It monitors “transactions in real time”, sifting through billions of data points in a way no human team ever could.

- Algorithmic Trading: AI models are used to “analyze news reports, financial filings, and social media” to predict market sentiment and execute trades faster than a human can blink.
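The fraud-detection idea above, spotting a transaction that breaks your usual pattern, can be sketched with a simple statistical check. This is an assumption-heavy toy: the purchase history is invented, the threshold of 3 standard deviations is an arbitrary choice, and real bank models weigh far more than the dollar amount.

```python
from statistics import mean, stdev

# Made-up purchase history for one cardholder, in dollars.
PAST_PURCHASES = [23.5, 41.0, 18.2, 36.9, 29.4, 33.1, 25.8, 38.6]


def looks_unusual(amount, history, threshold=3.0):
    """Flag a purchase that sits far outside the customer's usual range."""
    typical = mean(history)
    spread = stdev(history)
    # How many standard deviations away from "normal" is this purchase?
    z_score = abs(amount - typical) / spread
    return z_score > threshold


print(looks_unusual(31.0, PAST_PURCHASES))    # an ordinary purchase
print(looks_unusual(950.0, PAST_PURCHASES))   # far outside the pattern
```

The $31 purchase passes quietly, while the $950 one gets flagged. That flag is what triggers the text from your bank.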

In Your Packages (Logistics)

Amazon’s supply chain is one of the world’s largest AI operations. AI is used for “demand forecasting” (predicting what you’ll buy before you buy it), “inventory management”, and “optimizing delivery routes”. Inside their warehouses, AI-enabled robots “help employees fulfill orders” and can “identify and store inventory 75% faster”.

In all these cases, the AI is a tool. It does the superhuman-scale task: sifting 100 million compounds, 1 billion transactions, or 10 million inventory items. This frees up the human expert—the doctor, the scientist, the analyst—to apply judgment, context, and ethics to the AI’s findings.

The Parts That Keep Experts Up at Night

This is the most important part of the guide. To trust a tool, you have to understand its limits. AI is not magic. It is a powerful, flawed tool, and its flaws are serious. The biggest problems are not “killer robots”; they are much more subtle and already here.

1. “Garbage In, Gospel Out” (The Bias Problem)

An AI is “only as good as the data it’s trained on”. And our data, our history, is full of human bias. An AI trained on this data will not just reflect that bias; it will learn it, amplify it, and operationalize it at a massive scale.

- Real-World Example (Healthcare): An algorithm used in U.S. hospitals to predict which patients needed “extra medical care” was found to have “heavily favored white patients over black patients”. The AI wasn’t racist. But it was trained to use past healthcare cost as a proxy for sickness. Poorer communities, which have higher minority populations, spend less on healthcare, not because they’re healthier, but because they can’t afford it. The AI learned a “statistically correct” but morally disastrous pattern.

- Real-World Example (Hiring): Amazon had to scrap an AI recruiting tool because it taught itself to be sexist. It was trained on 10 years of “mostly male” resumes. It learned, correctly, that “male” was a predictor of being hired. It then started penalizing resumes that included the word “women’s” (like “captain of the women’s chess club”).
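The healthcare example comes down to ranking people by the wrong number. Here is a toy illustration with six invented patients; it is not the hospital algorithm itself, just a sketch of what happens when cost stands in for need. By assumption, group B has the same medical needs as group A but half the past spending.

```python
# Made-up patients. "need" is true illness severity (0-10); "past_cost" is
# what was spent on their care. Group B is assumed to have had less access.
PATIENTS = [
    {"id": "A1", "group": "A", "need": 8, "past_cost": 8000},
    {"id": "A2", "group": "A", "need": 5, "past_cost": 5000},
    {"id": "A3", "group": "A", "need": 2, "past_cost": 2000},
    {"id": "B1", "group": "B", "need": 8, "past_cost": 4000},
    {"id": "B2", "group": "B", "need": 5, "past_cost": 2500},
    {"id": "B3", "group": "B", "need": 2, "past_cost": 1000},
]


def top_patients(patients, score_key, slots=2):
    """Return the ids of the patients ranked highest on the given field."""
    ranked = sorted(patients, key=lambda p: p[score_key], reverse=True)
    return [p["id"] for p in ranked[:slots]]


# Ranking by the proxy (cost) versus ranking by what we actually care about.
print(top_patients(PATIENTS, "past_cost"))  # ['A1', 'A2']
print(top_patients(PATIENTS, "need"))       # ['A1', 'B1']
```

Ranked by cost, both extra-care slots go to group A. Ranked by actual need, the sickest patient in group B gets one. The math is identical in both cases; only the choice of what to measure changed.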

2. The “Black Box” Problem

For many of the most advanced AI models (“Deep Learning” models), we have a serious problem: we don’t always know how they get their answers.

We can see the input (the data) and the output (the decision), but the “reasoning” in the middle is an “opaque” tangle of millions of mathematical parameters. It’s a “black box”.

This is a legal and ethical nightmare. If an AI model denies you a loan, a job interview, or bail, you have a right to know why. But the bank’s or judge’s answer might be, “We don’t know. The algorithm told us.” How do you appeal a decision when no one, not even the creators, can explain its logic?

3. When AI Confidently “Lies” (Hallucinations)

This is the big problem with the new “Generative AI” models like ChatGPT. A “hallucination” is when the AI generates an output that is “nonsensical or altogether inaccurate”… but delivers it with complete confidence.

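- Real-World Example: Google’s Bard chatbot (now Gemini) famously “hallucinated” that the James Webb Space Telescope had taken the first pictures of an exoplanet (it hadn’t; the first such image was taken in 2004 by the European Southern Observatory’s Very Large Telescope).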

- Real-World Example (High-Stakes): A law firm in New York used ChatGPT for legal research. The chatbot invented several fake, non-existent legal cases and “precedents.” The lawyers, not checking the “research,” submitted a brief full of this fabricated information to a federal judge. They were sanctioned, and it was a black mark on their careers.

These three problems—Bias, the Black Box, and Hallucinations—are all symptoms of that same root cause we discussed: AI is a statistical engine, not a human brain.

It doesn’t “know” it’s biased; it’s just mirroring the statistics of its training data. Its “reasoning” is a “black box” because it’s a tangle of complex math, not human-readable logic. And it “hallucinates” because its goal is not to tell the “truth.” Its goal is to predict the next most statistically plausible word in a sequence. “Fabricating” a plausible-sounding legal case is a natural side-effect of a system that doesn’t “know” what a “lie” is.
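You can watch a miniature version of this happen. The sketch below is a toy next-word predictor built from word-pair counts (a “bigram” model), which is enormously simpler than a real chatbot but works on the same principle of picking a plausible next word. The three training sentences are invented.

```python
from collections import Counter, defaultdict

# A tiny made-up "training corpus". It contains no true/false labels at all.
CORPUS = (
    "the court ruled in favor of the airline . "
    "the court ruled in favor of the passenger . "
    "the judge cited the court ."
)


def build_bigram_model(text):
    """Count which word follows which in the training text."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model, start, length=6):
    """Repeatedly append the most frequent next word."""
    output = [start]
    for _ in range(length):
        candidates = model.get(output[-1])
        if not candidates:
            break
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)


model = build_bigram_model(CORPUS)
print(generate(model, "judge"))  # judge cited the court ruled in favor
```

The output reads like a legal statement, yet that sentence appears nowhere in the training text. Nothing in the code checks whether it is true, because truth was never part of the objective.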

So, Where Do You Go From Here?

This brings us back to the beginning. AI is not “malevolent,” and it’s not a “magic wand” that can solve every problem.

It’s a tool. A very powerful one. And as you start to learn more about it, you’ll be tempted to make a few common mistakes.

The biggest “beginner mistake” is asking, “What’s the best algorithm?” An expert knows the real question is, “What’s my data like?” People “overlook the fundamentals”; they forget that the data and the domain expertise (knowing what the data means in the real world) are far more important than the flashy algorithm.

The second mistake is getting stuck in the “tutorial trap”, thinking you have to “code ML algorithms from scratch” to understand them. You don’t. You’re already an expert in what matters: the real-world applications.

The best way to understand AI is to stop seeing it as a subject and start seeing it as a tool and a mirror.

It’s a tool that is augmenting human capability in profound ways. And it’s a mirror. Because it is trained on our data—our language, our history, our decisions, and “existing societal prejudices”—it reflects us. It shows us our own patterns, our own brilliance, and our own deeply-ingrained biases, amplified at a scale we’ve never seen before.

The real “work” of AI, for all of us, isn’t just about building better models. It’s about looking in that mirror and deciding, very carefully, what kind of world we want to show it.
