Elon Musk wants Tesla to be seen as “much more than an electric car company.” On Thursday’s Tesla AI Day, the CEO described Tesla as a company with “deep AI activity in hardware on the inference level and on the training level” that can be used down the line for applications beyond self-driving cars, including a humanoid robot that Tesla is apparently building.

Tesla AI Day, which started after a rousing 45 minutes of industrial music pulled straight from “The Matrix” soundtrack, featured a series of Tesla engineers explaining various Tesla tech with the clear goal of recruiting the best and brightest to join Tesla’s vision and AI team and help the company go to autonomy and beyond.

“There’s a tremendous amount of work to make it work and that’s why we need talented people to join and solve the problem,” said Musk.

Like both “Battery Day” and “Autonomy Day,” the event on Thursday was streamed live on Tesla’s YouTube channel. There was a lot of super technical jargon, but here are the top four highlights of the day.

Tesla Bot: A definitely real humanoid robot

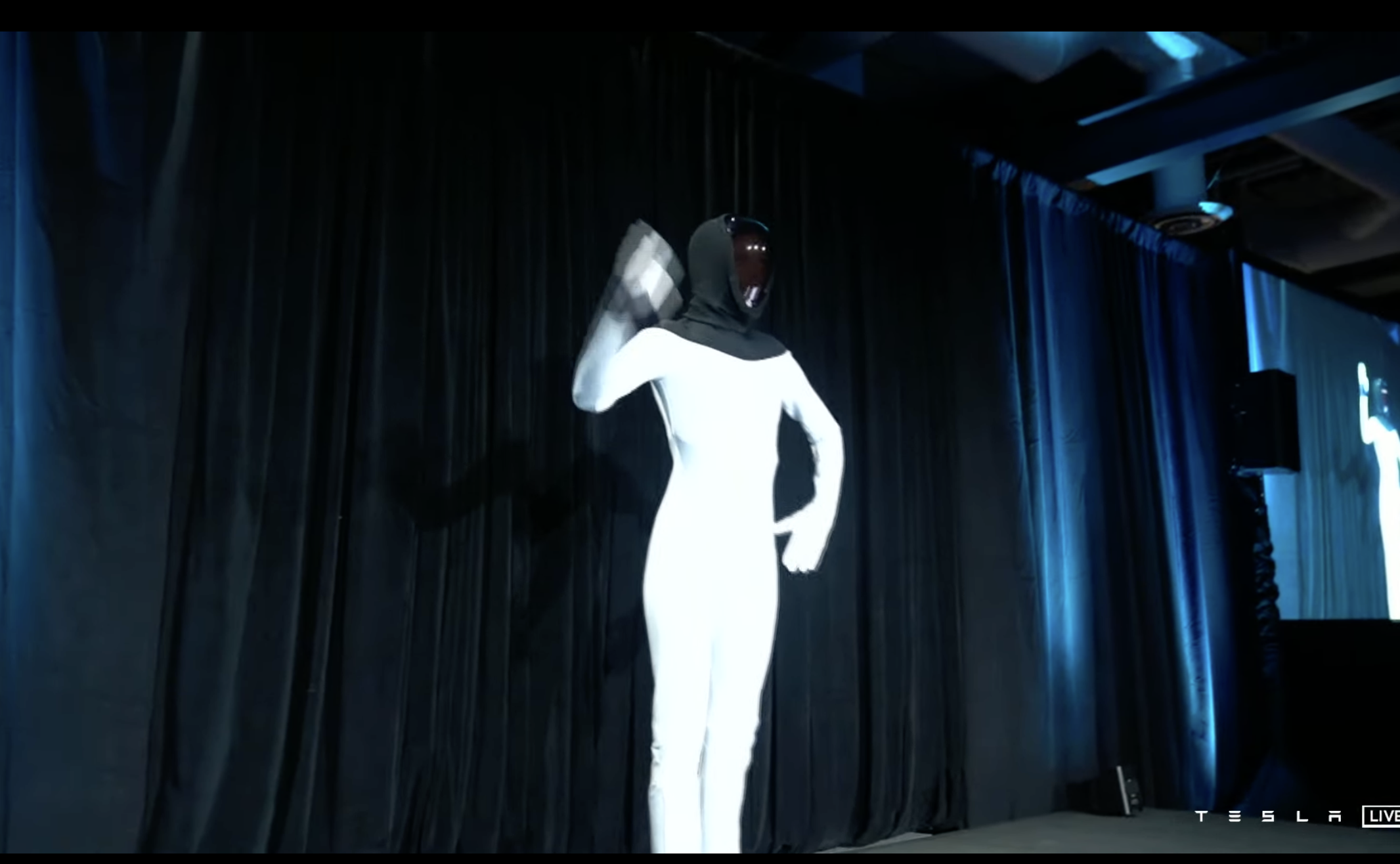

This bit of news was the last update to come out of AI Day before audience questions began, but it’s certainly the most interesting. After the Tesla engineers and executives talked about computer vision, the Dojo supercomputer and the Tesla chip (all of which we’ll get to in a moment), there was a brief interlude in which what looked like an alien go-go dancer appeared on stage, dressed in a white body suit with a shiny black mask for a face. Turns out, this wasn’t just a Tesla stunt, but rather an intro to the Tesla Bot, a humanoid robot that Tesla is actually building.

Image Credits: Tesla

When Tesla talks about using its advanced technology in applications outside of cars, we didn’t think it was talking about robot slaves. That’s not an exaggeration. CEO Elon Musk envisions a world in which human drudgery like grocery shopping, “the work that people least like to do,” can be taken over by humanoid robots like the Tesla Bot. The bot is 5’8″, 125 pounds, can deadlift 150 pounds, walk at 5 miles per hour and has a screen for a head that displays important information.

“It’s intended to be friendly, of course, and navigate a world built for humans,” said Musk. “We’re setting it such that at a mechanical and physical level, you can run away from it and most likely overpower it.”

Because everyone is definitely afraid of getting beat up by a robot that’s truly had enough, right?

The bot, a prototype of which is expected for next year, is being proposed as a non-automotive robotic use case for the company’s work on neural networks and its Dojo advanced supercomputer. Musk did not share whether the Tesla Bot would be able to dance.

Unveiling of the chip to train Dojo

Image Credits: Tesla

Tesla director Ganesh Venkataramanan unveiled Tesla’s computer chip, designed and built entirely in-house, that the company is using to run its supercomputer, Dojo. Much of Tesla’s AI architecture is dependent on Dojo, the neural network training computer that Musk says will be able to process vast amounts of camera imaging data four times faster than other computing systems. The idea is that the Dojo-trained AI software will be pushed out to Tesla customers via over-the-air updates.

The chip that Tesla revealed on Thursday is called “D1,” and it is built on 7 nm technology. Venkataramanan proudly held up the chip that he said has GPU-level compute with CPU connectivity and twice the I/O bandwidth of “the state of the art networking switch chips that are out there today and are supposed to be the gold standards.” He walked through the technicalities of the chip, explaining that Tesla wanted to own as much of its tech stack as possible to avoid any bottlenecks. Tesla introduced a next-gen computer chip last year, produced by Samsung, but it has not quite been able to escape the global chip shortage that has rocked the auto industry for months. To survive the shortage, Musk said during an earnings call this summer that the company had been forced to rewrite some vehicle software after having to substitute in alternate chips.

Aside from getting around limited availability, the overall goal of taking the chip production in-house is to increase bandwidth and decrease latency for better AI performance.

“We can do compute and data transfers simultaneously, and our custom ISA, which is the instruction set architecture, is fully optimized for machine learning workloads,” said Venkataramanan at AI Day. “This is a pure machine learning machine.”

Venkataramanan also revealed a “training tile” that integrates multiple chips to get higher bandwidth and an incredible computing power of 9 petaflops per tile and 36 terabytes per second of bandwidth. Together, the training tiles compose the Dojo supercomputer.

To Full Self-Driving and beyond

Many of the speakers at the AI Day event noted that Dojo will not just be a technology for Tesla’s “Full Self-Driving” (FSD) system, its definitely impressive advanced driver assistance system that’s also definitely not yet fully self-driving or autonomous. The powerful supercomputer is built with multiple aspects, such as the simulation architecture, that the company hopes to expand to be universal and even open up to other automakers and tech companies.

“This is not intended to be just limited to Tesla cars,” said Musk. “Those of you who’ve seen the full self-driving beta can appreciate the rate at which the Tesla neural net is learning to drive. And this is a particular application of AI, but I think there’s more applications down the road that will make sense.”

Musk said Dojo is expected to be operational next year, at which point we can expect talk about how this tech can be applied to many other use cases.

Solving computer vision problems

During AI Day, Tesla backed its vision-based approach to autonomy yet again, an approach that uses neural networks to ideally allow the car to function anywhere on earth via its “Autopilot” system. Tesla’s head of AI, Andrej Karpathy, described Tesla’s architecture as “building an animal from the ground up” that moves around, senses its environment and acts intelligently and autonomously based on what it sees.

Andrej Karpathy, head of AI at Tesla, explaining how Tesla manages data to achieve computer vision-based semi-autonomous driving. Image Credits: Tesla

“So we are building of course all of the mechanical components of the body, the nervous system, which has all the electrical components, and for our purposes, the brain of the autopilot, and specifically for this section the synthetic visual cortex,” he said.

Karpathy illustrated how Tesla’s neural networks have developed over time, and how now, the visual cortex of the car, which is essentially the first part of the car’s “brain” that processes visual information, is designed in tandem with the broader neural network architecture so that information flows into the system more intelligently.

The two main problems that Tesla is working on solving with its computer vision architecture are temporary occlusions (like cars at a busy intersection blocking Autopilot’s view of the road beyond) and signs or markings that appear earlier in the road (like if a sign 100 meters back says the lanes will merge, the computer once upon a time had trouble remembering that by the time it made it to the merge lanes).

To solve for this, Tesla engineers fell back on a spatial recurrent neural network video module, wherein different parts of the module keep track of different aspects of the road and form a space-based and time-based queue, both of which create a cache of data that the model can refer back to when trying to make predictions about the road.
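
The idea of a time-based queue and a space-based queue feeding one cache can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not Tesla’s implementation: the `FeatureCache` class, the `observe` method, the thresholds and the plain strings standing in for network features are all hypothetical and were not shown at AI Day.

```python
from collections import deque
from dataclasses import dataclass
from typing import Any, Deque


@dataclass
class CachedFeature:
    timestamp_s: float  # when the observation was made
    odometer_m: float   # distance travelled at that moment
    feature: Any        # stand-in for whatever the network extracted


class FeatureCache:
    """Hypothetical cache holding a time-based and a space-based queue."""

    def __init__(self, time_step_s: float, distance_step_m: float, maxlen: int):
        # All three values are illustrative assumptions.
        self.time_step_s = time_step_s
        self.distance_step_m = distance_step_m
        self.time_queue: Deque[CachedFeature] = deque(maxlen=maxlen)
        self.space_queue: Deque[CachedFeature] = deque(maxlen=maxlen)

    def observe(self, timestamp_s: float, odometer_m: float, feature: Any) -> None:
        entry = CachedFeature(timestamp_s, odometer_m, feature)

        # Time-based queue: store an entry once enough time has passed,
        # which keeps context alive while the car waits behind an occlusion.
        if (not self.time_queue
                or timestamp_s - self.time_queue[-1].timestamp_s >= self.time_step_s):
            self.time_queue.append(entry)

        # Space-based queue: store an entry once the car has moved far enough,
        # so a sign passed a while back is not flushed while standing still.
        if (not self.space_queue
                or odometer_m - self.space_queue[-1].odometer_m >= self.distance_step_m):
            self.space_queue.append(entry)


if __name__ == "__main__":
    cache = FeatureCache(time_step_s=1.0, distance_step_m=10.0, maxlen=5)
    cache.observe(0.0, 0.0, "merge sign")
    cache.observe(0.5, 12.0, "lane lines")
    cache.observe(2.0, 12.0, "stopped at light")
    print([e.feature for e in cache.time_queue])
    print([e.feature for e in cache.space_queue])
```

Keeping two queues means a long stop does not crowd out what the car saw earlier along the road, while a fast stretch of driving does not crowd out what it saw a moment ago.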

The company flexed its over 1,000-person manual data labeling team and walked the audience through how Tesla auto-labels certain clips, many of which are pulled from Tesla’s fleet on the road, in order to be able to label at scale. With all of this real-world info, the AI team then uses incredible simulation, creating “a video game with Autopilot as the player.” The simulations help particularly with data that’s difficult to source or label, or with scenarios that need to run in a closed loop.

Background on Tesla’s FSD

At around minute forty in the waiting room, the dubstep music was joined by a video loop showing Tesla’s FSD system with the hand of a seemingly alert driver just grazing the steering wheel, no doubt a legal requirement for the video after investigations into Tesla’s claims about the capabilities of its definitely not autonomous advanced driver assistance system, Autopilot. The National Highway Traffic Safety Administration earlier this week said it would open a preliminary investigation into Autopilot following 11 incidents in which a Tesla crashed into parked emergency vehicles.

A few days later, two U.S. Democratic senators called on the Federal Trade Commission to investigate Tesla’s marketing and communication claims around Autopilot and the “Full Self-Driving” capabilities.

Tesla released the beta 9 version of Full Self-Driving to much fanfare in July, rolling out the full suite of features to a few thousand drivers. But if Tesla wants to keep this feature in its cars, it’ll need to get its tech up to a higher standard. That’s where Tesla AI Day comes in.

“We basically want to encourage anyone who is interested in solving real-world AI problems at either the hardware or the software level to join Tesla, or consider joining Tesla,” said Musk.

And with technical nuggets as in-depth as the ones featured on Thursday plus a bumping electronic soundtrack, what red-blooded AI engineer wouldn’t be frothing at the mouth to join the Tesla crew?
