I still remember the first time I tried to read a technical paper on artificial intelligence. I stared at the screen, squinting at a diagram that looked less like a computer program and more like a plate of spaghetti thrown against a wall.

It was intimidating. And if you’re reading this, you’ve probably felt that same confusion.

You hear terms like “Deep Learning” and “Backpropagation” tossed around in tech news, usually accompanied by pictures of glowing blue brains or robots shaking hands with humans. But what is actually happening under the hood?

The truth is, understanding neural networks as a beginner doesn’t require calculus or complex Python code. At their core, they are just a really smart way of playing “Hot and Cold” with data until the computer guesses the right answer.

If you are a student, a career changer, or just someone who wants to understand the technology powering your Netflix recommendations, this guide is for you. We are going to strip away the math and look at the logic.

The “Biological” Inspiration (It’s Not Actually a Brain)

Before we talk about computers, let’s talk about your head.

The reason we call them “neural” networks is that they are loosely inspired by the human brain. Your brain is made up of billions of neurons. When you see a friend’s face, a specific pattern of these neurons lights up (fires), sending electrical signals down to other neurons, which eventually leads to the thought: “Oh, that’s Sarah.”

Artificial Neural Networks (ANNs) try to mimic this structure, but with code.

Imagine a neural network as a team of detectives solving a mystery. One detective looks at the hair color, another looks at the height, and a third looks at the shoes. They pass their notes to a chief inspector who makes the final decision.

Mini Case Study: The “Hotdog” Identifier

Let’s look at a realistic scenario. Imagine you want to build an app that tells you if a picture contains a hotdog or not (yes, like that scene from the TV show Silicon Valley).

The Input: You feed the computer a photo.

The Neurons: The computer breaks the image into pixels, and each pixel’s brightness value is fed into its own input neuron.

The Process:

Layer 1 might just look for edges and curves.

Layer 2 might combine those curves into a bun shape.

Layer 3 might pick up the color red (ketchup) or yellow (mustard).

The Output: The system spits out a probability: “98% chance this is a hotdog.”

The Surprising Insight: The computer doesn’t actually “know” what a hotdog is. It doesn’t understand the concept of food. It simply recognizes that the mathematical pattern of pixels in a hotdog photo is different from the pattern in a pizza photo.

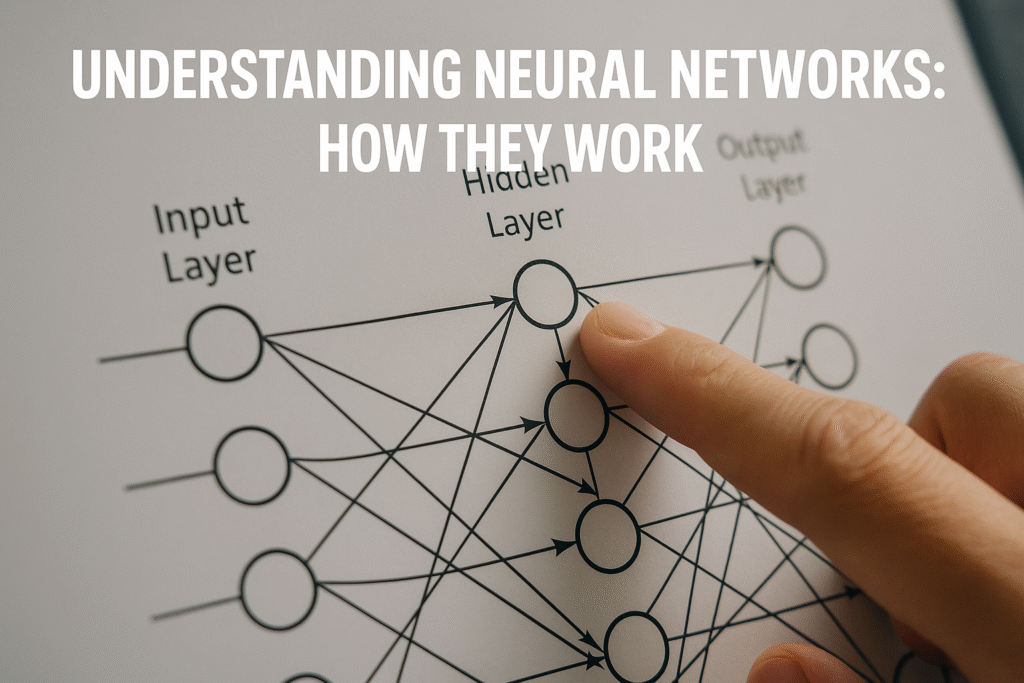

The Architecture: Input, Hidden, and Output Layers

To understand how this works without your eyes glazing over, visualize a sandwich.

The Input Layer (The Top Bread): This is the raw data. It could be the pixels of an image, the words in a sentence, or the numbers in a spreadsheet.

The Hidden Layers (The Meat and Cheese): This is where the magic happens. In a “Deep Learning” network, you might have dozens or even hundreds of these layers. This is where the network crunches the numbers, looks for patterns, and filters information.

The Output Layer (The Bottom Bread): This is the final prediction. “Yes, it’s a cat,” or “The house price is $300,000.”
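To make the sandwich concrete, here is a minimal sketch of one input flowing through it, written in plain Python with no libraries. It assumes a tiny network (3 inputs, 2 hidden neurons, 1 output). The weights, the helper name `dense_layer`, and the choice of ReLU for the hidden layer and sigmoid for the output are our own illustrative assumptions, not values from a trained model.

```python
import math

def relu(z):
    # Passes positive values through, turns negatives into 0
    return max(0.0, z)

def sigmoid(z):
    # Squashes any number into the range (0, 1) so it can read as a probability
    return 1.0 / (1.0 + math.exp(-z))

def dense_layer(inputs, weights, biases, activation):
    # Each neuron: weighted sum of all inputs, plus its bias, then the activation
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(total))
    return outputs

# Input layer (top bread): three raw feature values, e.g. normalized pixels
inputs = [0.9, 0.1, 0.4]

# Hidden layer (the filling): two neurons, each with one weight per input
hidden_weights = [[0.5, -0.2, 0.8], [-0.3, 0.9, 0.1]]
hidden_biases = [0.1, -0.1]

# Output layer (bottom bread): one neuron producing a probability
output_weights = [[1.2, -0.7]]
output_biases = [-0.2]

hidden = dense_layer(inputs, hidden_weights, hidden_biases, relu)
prediction = dense_layer(hidden, output_weights, output_biases, sigmoid)[0]
print(f"Probability of 'yes': {prediction:.2f}")
```

Real libraries perform the same arithmetic with fast matrix operations; changing any single weight changes the final probability, and that is exactly what training adjusts.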

Common Mistake: Thinking More Layers = Better

A common trap for beginners is assuming that adding more hidden layers always makes the AI smarter. I made this mistake when I first started toying with TensorFlow. I built a massive network to predict simple logical outputs and it failed miserably.

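Why? Because it was too complex for the simple problem I gave it. It’s like hiring a team of 50 nuclear physicists to change a lightbulb: they’ll overthink it. An oversized network is slower to train and tends to memorize its training examples instead of learning the general rule. For many simple tasks, a smaller network is faster and just as accurate, often more so.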

Weights and Biases: The “Volume Knobs” of AI

This is usually the part where tutorials throw heavy math at you. We aren’t going to do that. Instead, think of a sound mixing board in a recording studio.

Every connection between neurons has a Weight.

Think of the weight as a volume knob. If a specific input is really important (like seeing a tail when trying to identify a cat), the neural network turns that volume knob way up (high weight). If the input is irrelevant (like the background color of the sky), it turns the knob down (low weight).

Then there is the Bias. This is like an offset that shifts the neuron’s activation threshold. A positive bias lets the neuron say, “Even if the inputs are weak, this is worth activating for,” while a negative bias makes it harder to activate unless the inputs are strong.
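Here is a sketch of a single neuron with two volume knobs and a bias, assuming a hypothetical cat detector whose inputs are “has a tail” and “sky is blue.” The weights, the helper name `neuron_fires`, and the simple on/off (step) activation are illustrative assumptions; real networks use smooth activations so the knobs can be tuned gradually.

```python
def neuron_fires(inputs, weights, bias, threshold=0.0):
    # Multiply each input by its "volume knob" (weight), add them up, then add the bias
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    # Simple step activation: fire only if the total clears the threshold
    return total > threshold

# Hypothetical inputs: [has_tail, sky_is_blue], each between 0 and 1
weights = [2.0, 0.01]  # the tail knob is turned way up; the sky knob is nearly off

weak_evidence = [0.2, 1.0]  # only a faint hint of a tail, bright blue sky

print(neuron_fires(weak_evidence, weights, bias=-1.0))  # negative bias: stays quiet
print(neuron_fires(weak_evidence, weights, bias=0.5))   # positive bias: fires anyway
```

With identical inputs and weights, only the bias changes, and that alone decides whether the neuron activates.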

How the Machine “Learns” (Training)

Here is the secret: When you first create a neural network, all those volume knobs are set to random positions. The network is essentially stupid.

If you show it a picture of a cat, it might guess “Toaster.”

This is where Training comes in.

Forward Pass: You show the image. The network guesses “Toaster.”

Loss Function: You tell the network, “Wrong. That was a cat.” The network calculates how far off it was.

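Backpropagation (The Magic): This is the most famous algorithm in AI. The network works backward through the layers, calculating how much each volume knob contributed to the error. An optimizer (usually some form of gradient descent) then nudges each knob slightly in the direction that reduces the error. In effect, it says, “Okay, turn the ‘fur’ knob up and the ‘metal’ knob down.”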

It repeats this process thousands, sometimes millions of times. Eventually, the knobs are tuned well enough that the network recognizes cats reliably, though no network is right every single time.
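For a single neuron, the whole loop (forward pass, loss, backward pass, update) fits in a few lines. This sketch assumes a toy task, learning the logical OR of two inputs, and uses binary cross-entropy as the loss and plain gradient descent as the optimizer; the learning rate and epoch count are arbitrary choices. With only one neuron there are no hidden layers to propagate back through, so the backward pass reduces to one formula; backpropagation applies the same chain-rule bookkeeping layer by layer in deeper networks.

```python
import math
import random

def sigmoid(z):
    # Squash any score into a guess between 0 and 1
    return 1.0 / (1.0 + math.exp(-z))

# Toy dataset: logical OR. Each example is ([input_1, input_2], correct_answer)
data = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

random.seed(0)
# The knobs start at random positions: the network is "stupid"
weights = [random.uniform(-1, 1), random.uniform(-1, 1)]
bias = random.uniform(-1, 1)
learning_rate = 0.5

for epoch in range(2000):
    total_loss = 0.0
    for inputs, label in data:
        # 1. Forward pass: make a guess
        guess = sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)
        # 2. Loss function (binary cross-entropy): how far off was the guess?
        total_loss += -math.log(guess) if label == 1 else -math.log(1 - guess)
        # 3. Backward pass: for sigmoid + cross-entropy, d(loss)/d(score) = guess - label
        error = guess - label
        # 4. Update: nudge each knob a little in the direction that lowers the loss
        weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
        bias -= learning_rate * error

def predict(inputs):
    # Round the final guess to a hard 0/1 answer
    return round(sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias))

print("Loss in final epoch:", round(total_loss, 4))
print("Predictions:", [predict(inputs) for inputs, _ in data])
```

Printing `total_loss` every few hundred epochs is a good way to watch the “Hot and Cold” game converge.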

Note: If you are interested in the programming side of this, check our guide on [Getting Started with Python for AI] to see how these loops are written in code.

The Three Main Types of Neural Networks

Not all networks are built the same. Depending on the job, you need a different tool.

1. Feedforward Neural Networks (FNN)

This is the vanilla version. Information moves in one direction—from input to output. No loops.

Best for: Simple classification, data processing.

Real-world example: A bank deciding if a transaction looks fraudulent based on the amount and location.

2. Convolutional Neural Networks (CNN)

These are the eyes of AI. They are designed specifically to process grid-like data, such as the rows and columns of pixels in an image.

Best for: Image recognition, medical scans, facial detection.

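Why they work: They slide a small filter (often just a few pixels wide) across the image, examining one small patch at a time (this sliding operation is the convolution), rather than trying to digest the whole picture at once.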
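A minimal sketch of that sliding-window idea in plain Python, assuming a made-up 5x5 grayscale image and a hand-picked 3x3 vertical-edge filter (in a real CNN the filter values are learned during training). Like most deep-learning libraries, this computes what mathematicians call cross-correlation: the filter is not flipped. The function name `convolve2d` is our own.

```python
def convolve2d(image, kernel):
    # Slide the kernel over every position where it fits entirely inside the image
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel by the patch underneath it and add everything up
            total = sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            row.append(total)
        feature_map.append(row)
    return feature_map

# Hypothetical 5x5 grayscale image: dark (0) on the left, bright (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# Hand-picked 3x3 vertical-edge detector: right column minus left column
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, kernel)
for row in feature_map:
    print(row)
```

The large values appear where the filter straddles the dark-to-bright boundary; over the uniformly bright region the response drops to 0.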

3. Recurrent Neural Networks (RNN)

These networks have a “memory.” They carry a hidden state from one step to the next, so what they output now can depend on what came earlier in the sequence.

Best for: Language translation, speech recognition, predicting stock trends.

Real-world example: When you type a text message and your phone suggests the next word. It remembers the context of the sentence you just wrote.
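Here is a sketch of the core recurrent idea, assuming each word has already been turned into a single number and using made-up fixed weights (a real RNN learns its weights and works with vectors of numbers). The function names `rnn_step` and `run_rnn` are hypothetical. The same numbers fed in a different order leave a different final memory, which is what lets an RNN care about context.

```python
import math

def rnn_step(memory, x, w_memory=0.8, w_input=1.0):
    # The new memory mixes the old memory with the current input, squashed by tanh
    return math.tanh(w_memory * memory + w_input * x)

def run_rnn(sequence):
    memory = 0.0  # nothing remembered before the first word
    for x in sequence:
        memory = rnn_step(memory, x)  # the memory is carried into the next step
    return memory

# The same three numbers in a different order leave a different final memory
print(round(run_rnn([1.0, 0.0, -1.0]), 3))
print(round(run_rnn([-1.0, 0.0, 1.0]), 3))
```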

A Real Scenario: How Streaming Services Know You

Let’s apply this to something you use every day. Have you ever finished a show on a streaming platform, and the very next recommendation is exactly what you wanted to watch?

That usually isn’t luck. It is often the work of a neural network, sometimes combined with other recommendation techniques.

The Scenario: You watch a sci-fi movie from the 1980s. The neural network analyzes your behavior:

Input: Genre (Sci-Fi), Era (80s), Watch time (Completed), Rating (5 stars).

Hidden Layers: The network compares this to millions of other users. It finds patterns: “Users who liked Blade Runner also tended to like Dune.”

Output: It places Dune on your homepage.

The Trap (Overfitting): Sometimes, the recommendation engine gets too obsessed. If you watch one cooking show by accident, suddenly your entire feed is cooking shows. This is a form of Overfitting: the system clings to a few specific data points (here, your most recent viewing) too closely and loses the big picture.
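This is not a neural network, but the classic curve-fitting picture of overfitting shows the same trap in a few lines. The sketch assumes six made-up points that roughly follow y = 2x. One model memorizes every point exactly (a degree-5 polynomial through all six, built with Lagrange interpolation); the other is a plain best-fit straight line. The names `memorizer` and `simple_rule` are our own.

```python
# Six made-up training points that roughly follow y = 2x, plus a little noise
xs = [0, 1, 2, 3, 4, 5]
ys = [0.3, 1.8, 4.4, 5.7, 8.3, 9.6]

def memorizer(x):
    # Degree-5 polynomial through every training point (Lagrange interpolation).
    # It fits the noise as faithfully as the trend.
    total = 0.0
    for i, (xi, yi) in enumerate(zip(xs, ys)):
        term = yi
        for j, xj in enumerate(xs):
            if j != i:
                term *= (x - xj) / (xi - xj)
        total += term
    return total

# Simple model: best-fit straight line (ordinary least squares)
mean_x = sum(xs) / len(xs)
mean_y = sum(ys) / len(ys)
slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
    (x - mean_x) ** 2 for x in xs
)
intercept = mean_y - slope * mean_x

def simple_rule(x):
    return slope * x + intercept

def worst_training_error(model):
    return max(abs(model(x) - y) for x, y in zip(xs, ys))

print("Memorizer training error:", round(worst_training_error(memorizer), 3))
print("Line training error:", round(worst_training_error(simple_rule), 3))
# A new, unseen input
print("Memorizer at x=6:", round(memorizer(6), 2))
print("Line at x=6:", round(simple_rule(6), 2))
```

The memorizer scores a perfect zero on its training data yet predicts a negative value at x = 6, while the humble line stays close to the true trend of about 12.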

Getting Hands-On: A Safe Way to Practice

You don’t need a supercomputer to try this out. In fact, you don’t even need to install software yet.

If you want to see a neural network think in real-time, I highly recommend visiting the TensorFlow Playground. It’s a website run by Google that lets you drag and drop layers and neurons to see how they affect the output.

Actionable Steps for Beginners:

Go to TensorFlow Playground.

Select a dataset: Choose the “Spiral” pattern (it’s the hardest one).

Add layers: Try adding 2 hidden layers with 4 neurons each.

Hit Play: Watch the background change colors as the machine “learns” to separate the blue dots from the orange dots.

It is surprisingly addictive, and it visualizes the “Volume Knob” concept perfectly.

Barriers to Entry (And Why You Should Ignore Them)

A lot of people ask me, “Do I need a PhD to work with this stuff?”

The answer is a definitive no.

While research scientists at OpenAI or DeepMind need advanced mathematics, applying neural networks is becoming a trade skill, like web development.

Tools that make it easy:

Keras: A Python library that acts like a wrapper around lower-level frameworks such as TensorFlow. It lets you build a neural network in about 10 lines of code. Once you grasp the basics here, try building your first simple neural network with Keras.

AutoML: Platforms that build the network for you. You just provide the data.

Surprising Tip: The “Black Box” Problem

Here is something experienced engineers worry about that beginners often miss. We often don’t know why a neural network makes a specific decision.

Because the “knowledge” is stored in millions of numerical weights (those volume knobs), you can’t just open a file and read the logic. This is called the “Black Box” problem. If a bank’s AI denies your loan, the bank might not be able to tell you exactly which variable caused the denial.

This is why Explainable AI (XAI) is becoming a huge field—trying to translate those numbers back into human logic.

Why Neural Networks Sometimes Fail

It is important to manage expectations. Neural networks are powerful, but they are brittle.

Garbage In, Garbage Out: If you train a network on bad data, you get bad results. If you train a face-recognition system only on photos of men, it is likely to perform poorly when it sees a woman.

Adversarial Attacks: Researchers have found that placing a few specially designed stickers on a stop sign can trick an image-recognition neural network (the kind studied for self-driving cars) into classifying it as a “Speed Limit 45” sign.
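Real physical attacks like the stop-sign stickers use more elaborate optimization, but the core idea fits in a few lines. This sketch assumes a made-up linear classifier over a 100-pixel image and applies the fast gradient sign method: for a linear model, the gradient of the score with respect to each pixel is just that pixel’s weight, so nudging every pixel by a small epsilon against the sign of its weight lowers the score as much as possible for that budget. All weights and pixel values are hypothetical.

```python
# Hypothetical toy classifier over a 100-pixel image: score > 0 means "stop sign"
weights = [0.05] * 60 + [-0.05] * 40
bias = -0.3
image = [0.5] * 100  # a flat grey image, pixel values in [0, 1]

def score(pixels):
    # Linear model: weighted sum of pixels plus bias
    return sum(w * p for w, p in zip(weights, pixels)) + bias

def sign(v):
    # Returns 1, 0, or -1
    return (v > 0) - (v < 0)

# Fast gradient sign method: the gradient of the score w.r.t. each pixel is its weight,
# so step every pixel by epsilon in the direction that lowers the score
epsilon = 0.05  # change every pixel by at most 5%
adversarial = [p - epsilon * sign(w) for p, w in zip(image, weights)]

print("Original score:", round(score(image), 3))
print("Adversarial score:", round(score(adversarial), 3))
print("Largest pixel change:", round(max(abs(a - p) for a, p in zip(adversarial, image)), 3))
```

No pixel moves by more than 5%, yet because the tiny nudges add up across all 100 pixels, the score flips from positive (stop sign) to negative.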

Safety Warning: As you explore AI, remember that these systems are statistical guessers, not truth-tellers. Never rely blindly on an AI model for critical medical or financial decisions without verifying the source.

If you are interested in how data quality affects results, you might find our article on [Data Cleaning Best Practices] helpful.

What’s Next?

Neural networks are no longer just science fiction. They are sorting your spam folder, unlocking your phone with your face, and helping doctors identify diseases earlier than ever before.

For the beginner, the path forward is clear:

Grasp the concept (inputs, weights, outputs).

Play with visual tools like TensorFlow Playground.

Learn a little Python if you want to build your own.

Don’t let the math scare you away. At the end of the day, it’s just a machine trying to find a pattern. And once you see the pattern, you can’t unsee it.

Editor — The editorial team at Prowell Tech. We research, test, and fact-check each guide to ensure accuracy and provide helpful, educational content. Our goal is to make tech topics understandable for everyone, from beginners to advanced users.

Disclaimer: This article is educational and informational. We’re not responsible for issues that arise from following these steps. For critical issues, please contact official support (Google, Apple, Microsoft, etc.).

Last Updated: December 2025
