You can’t open a news app or scroll through social media these days without seeing someone mentioning AI. Whether it’s a student using ChatGPT to draft an essay or a coder using Copilot to fix a bug, this technology has firmly parked itself in our daily lives.

But if you strip away the hype and the sci-fi movie references, what is actually happening under the hood?

If you are looking for Large Language Models explained in plain English, you’re in the right place. I remember the first time I interacted with a modern AI chatbot. I asked it to write a poem about my dog, a chaotic Golden Retriever named Barnaby. It didn’t just rhyme; it captured the essence of a dog that eats socks. It felt like magic.

But it wasn’t magic. It was math—really impressive statistics wrapped in a friendly interface.

Let’s break down exactly what these models are, how they learn to talk like humans, and why they sometimes make things up.

The “Super-Powered Autocomplete” Analogy

To understand an LLM (Large Language Model), look at your smartphone. Open a text message and type: “I am on my…”

Your phone probably suggests “way.”

This is a basic language model. It looks at the words you just typed and guesses the most likely next word based on your previous texting habits.
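To make that concrete, here is a minimal sketch of phone-style autocomplete. The message history is made up for illustration, and a real keyboard uses far more data and a smarter model, but the core idea is the same: count which word usually comes next, then suggest the winner.

```python
from collections import Counter, defaultdict

# Hypothetical message history; a real keyboard learns from far more text.
messages = [
    "i am on my way",
    "i am on my way home",
    "i am on my break",
    "see you on my way back",
]

# For every word, count which words have followed it.
followers = defaultdict(Counter)
for message in messages:
    words = message.split()
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1

def suggest(text):
    """Return the word seen most often after the last word of `text`."""
    words = text.lower().split()
    if not words or words[-1] not in followers:
        return None
    return followers[words[-1]].most_common(1)[0][0]

print(suggest("I am on my"))  # way
```

In this toy history, “way” follows “my” three times and “break” only once, so “way” wins.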

Now, imagine that instead of just training on your text messages, we trained that system on:

- A huge share of the books that have been published and digitized.

- A large slice of the open internet (Wikipedia, Reddit, news sites).

- Academic papers, code repositories, and movie scripts.

And then, we gave it a supercomputer to process all that data.

That is essentially what an LLM is. It is autocomplete on steroids. It isn’t “thinking” in the way you or I do; it is calculating probabilities. When you ask it a question, it isn’t looking up a database of facts like Google Search. It is predicting, word by word (or token by token), what should come next in the sentence to satisfy your prompt.

Key Insight: This is why AI can be so creative but also so wrong. It cares more about the pattern of the answer looking correct than the facts being true.
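Here is a rough sketch of that word-by-word prediction loop. It is a toy built on simple word counts, not how a real LLM is implemented (those use neural networks), and the tiny “training set” is invented for the demo. Watch what happens when the prompt is about a cat.

```python
from collections import Counter, defaultdict

# Hypothetical miniature "training set"; real models see vastly more text.
corpus = ("the dog eats socks . the dog eats socks . "
          "the dog chews shoes . the cat eats fish .")
words = corpus.split()

# Count which word follows which.
counts = defaultdict(Counter)
for current, nxt in zip(words, words[1:]):
    counts[current][nxt] += 1

def next_word_probabilities(word):
    """Turn raw follow-up counts into probabilities that sum to 1."""
    total = sum(counts[word].values())
    return {nxt: n / total for nxt, n in counts[word].items()}

def generate(prompt, max_words=10):
    """Extend the prompt one word at a time, always taking the likeliest word."""
    output = prompt.split()
    for _ in range(max_words):
        probs = next_word_probabilities(output[-1])
        if not probs:  # the model has never seen this word
            break
        best = max(probs, key=probs.get)
        output.append(best)
        if best == ".":
            break
    return " ".join(output)

print(next_word_probabilities("the"))  # {'dog': 0.75, 'cat': 0.25}
print(generate("the"))                 # the dog eats socks .
print(generate("the cat"))             # the cat eats socks .
```

The last line is the interesting one. The training text only ever says the cat eats fish, but “socks” is the most common word after “eats,” so the toy model confidently produces a sentence that fits the pattern and is wrong.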

Large Language Models Explained: The “Next Word” Trick

If you want to sound smart at a dinner party, you can tell people that LLMs are “probabilistic engines.” But for us, a library analogy works better.

Imagine a librarian who has read every book in existence. However, this librarian doesn’t understand the meaning of the stories. They just have a photographic memory of which words tend to appear near other words.

If you ask, “What is the capital of France?” the librarian doesn’t visualize the Eiffel Tower. They just know that across millions of documents, “Paris” is overwhelmingly the word that follows phrases like “the capital of France is.”

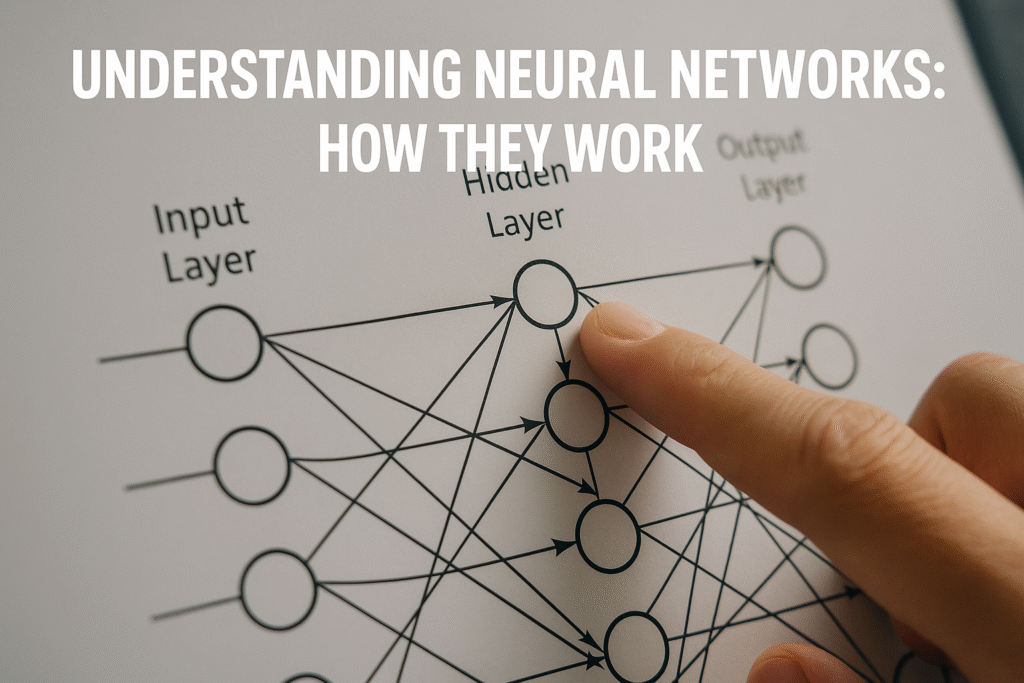

The Training Process (How They Learn)

Training an AI is like teaching a child to speak, but by force-feeding them the entire contents of a public library.

- Pre-training: The model is fed massive amounts of text and plays a guessing game with it. The training program hides the next word in a passage and asks the model to predict it. When the guess is wrong, the program nudges the model’s internal settings so the right word becomes a little more likely next time. Repeat this billions of times and the model picks up grammar, slang, facts, and reasoning patterns.

- Fine-tuning: This is where humans step in. A raw model might be rude, racist, or just weird. Humans review the model’s answers and rate them: “Good answer” or “Bad answer.” This steers the model to be helpful and polite.
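The guess-and-correct loop of pre-training can be sketched in a few lines. This is a deliberately tiny stand-in under stated assumptions: the training text is invented, the “model” is just a table of scores rather than a neural network, and the learning rate and number of passes are arbitrary choices. The mechanics mirror the real thing, though: predict, measure the error, nudge the numbers.

```python
import math

# Hypothetical training text; real pre-training uses vastly more data.
text = "the dog eats socks . the dog eats shoes . the dog eats socks .".split()
vocab = sorted(set(text))
index = {word: i for i, word in enumerate(vocab)}

# The adjustable numbers: one score per (current word, next word) pair.
scores = [[0.0] * len(vocab) for _ in vocab]

def predict(word):
    """Softmax: convert the scores for `word` into next-word probabilities."""
    row = scores[index[word]]
    exps = [math.exp(s) for s in row]
    total = sum(exps)
    return [e / total for e in exps]

def train(epochs=500, learning_rate=0.1):
    """Guess the next word, compare with the real one, and nudge the scores."""
    for _ in range(epochs):
        for current, actual in zip(text, text[1:]):
            probs = predict(current)
            row = scores[index[current]]
            for j in range(len(vocab)):
                target = 1.0 if j == index[actual] else 0.0
                # Gradient of the cross-entropy loss with respect to each score.
                row[j] -= learning_rate * (probs[j] - target)

def loss():
    """Average 'surprise' (cross-entropy) over the training text; lower is better."""
    pairs = list(zip(text, text[1:]))
    return -sum(math.log(predict(c)[index[a]]) for c, a in pairs) / len(pairs)

before = loss()
train()
after = loss()

eats_probs = predict("eats")
best = vocab[eats_probs.index(max(eats_probs))]
print(f"loss before: {before:.2f}, loss after: {after:.2f}, after 'eats': {best}")
```

Before training, every word is equally likely and the model is maximally “surprised.” After training, the surprise drops and the model favors “socks” after “eats,” because that is what it saw most often.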

Real-World Scenario: I once tried to use an older, raw AI model for a recipe. I asked for “spicy pasta,” and it started reciting code for a website about pasta. Why? Because it had seen pasta recipes and website code on the internet, and without fine-tuning, it didn’t know which one I wanted. Modern chatbots (like Gemini or ChatGPT) have undergone “Instruction Tuning” so they know you want a recipe, not HTML code.

Why “Parameters” Matter (And What They Are)

You might hear tech companies bragging about their model having “70 billion parameters” or “1 trillion parameters.”

Think of parameters like the knobs and dials on a massive sound mixing board.

- A small model has a few million knobs. It can write a simple sentence, but it might get confused easily.

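- A massive model (such as GPT-4 or Gemini Ultra) is widely believed to have hundreds of billions of knobs or more, although the companies behind them have not published exact counts.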

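Each “knob” is a number inside the model’s neural network that sets how strongly one piece of information influences another, and training is the process of tuning those numbers. In general, the more parameters a model has, the more nuance, complex reasoning, and obscure facts it can handle.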

Surprising Fact: Bigger isn’t always better for you. If you just need an AI to summarize an email, a massive model is overkill—like using a Ferrari to drive to the mailbox. Smaller models are faster, cheaper, and often just as good for simple tasks.
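A bit of back-of-the-envelope arithmetic shows why size matters in practice. The sketch below assumes each parameter is stored as a 16-bit number (2 bytes), which is a common choice but not the only one, and it counts only the weights themselves, not the extra memory needed to run the model. The 7 billion figure is a hypothetical smaller model added for comparison.

```python
# Assumed storage size per parameter for a few common number formats.
BYTES_PER_PARAMETER = {"float32": 4, "float16": 2, "int8": 1}

def model_size_gb(parameters, number_format="float16"):
    """Approximate size of the weights alone, in gigabytes (1 GB = 10**9 bytes)."""
    return parameters * BYTES_PER_PARAMETER[number_format] / 1e9

print(model_size_gb(70e9))         # 140.0 -> needs data-center hardware
print(model_size_gb(7e9))          # 14.0  -> within reach of a high-end PC
print(model_size_gb(7e9, "int8"))  # 7.0   -> smaller still with 8-bit numbers
```

The hardware comments are rough rules of thumb, but the takeaway holds: a model ten times smaller needs roughly ten times less memory just to load.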

The Hallucination Problem: When AI Lies to You

This is the most critical section for beginners. Because LLMs are prediction engines, not truth engines, they suffer from “hallucinations.”

A hallucination happens when the model predicts a sequence of words that looks plausible but is factually wrong.

A Common Mistake People Make: In 2023, a New York lawyer made headlines for using ChatGPT to research a legal brief. The AI invented court cases (names, dates, and rulings) that sounded 100% real but never happened. The brief was filed in federal court, and it did not end well: the judge sanctioned the lawyers involved.

Why did this happen? The AI knew the structure of a legal citation. It knew that “v.” usually goes between two names. It just filled in the blanks with plausible-sounding names that fit the pattern.
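You can reproduce the flavor of this failure with a toy pattern-filler. To be clear, this is not an LLM and not how one works internally. Every case name below is invented for the demo, and the script simply mixes and matches pieces that fit the “Name v. Name (Year)” shape without ever checking whether the result exists.

```python
import random

# Hypothetical stand-ins for "real" cases; all names are invented for this demo.
known_cases = {
    "Alder v. Birch Airlines (2004)",
    "Cole v. Dunmore Freight (2011)",
    "Ellis v. Fenwick Rail (2017)",
}

def parts(case):
    """Split 'Plaintiff v. Defendant (Year)' into its three pieces."""
    plaintiff, rest = case.split(" v. ")
    defendant, year = rest.rsplit(" (", 1)
    return plaintiff, defendant, year.rstrip(")")

plaintiffs, defendants, years = zip(*(parts(c) for c in sorted(known_cases)))

def plausible_citation(rng):
    """Fill the citation pattern with pieces that fit, without checking reality."""
    return f"{rng.choice(plaintiffs)} v. {rng.choice(defendants)} ({rng.choice(years)})"

rng = random.Random(0)  # fixed seed so the demo is repeatable
generated = [plausible_citation(rng) for _ in range(5)]
for citation in generated:
    status = "real" if citation in known_cases else "invented"
    print(f"{citation}: {status}")
```

Only 3 of the 27 possible combinations are in the “real” set, yet every output looks equally official. That is the trap: nothing about the format tells you which ones are made up.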

Quick Checklist: When to Trust an LLM

- Creative Writing: High Trust. (It can’t “lie” about a fictional story).

- Summarizing Text You Paste In: Fairly High Trust. (It is working with facts you provided, though it can still drop or distort a detail.)

- Historical Facts/Dates: Medium Trust. (Verify with Google).

- Medical/Legal/Financial Advice: Zero Trust. (Always consult a professional).

If you are interested in better ways to verify information, check out our guide on [How to Search Google Effectively] to cross-reference AI claims.

Practical Examples: How to Use LLMs Today

So, knowing all this, how do you actually get value out of these tools without falling into traps? Here are two scenarios.

Scenario A: The Email Assistant (The Right Way)

You need to send a polite decline to a wedding invitation.

- Prompt: “Write a polite email declining a wedding invitation because I will be out of the country. Make it warm and ask if they have a registry where I can send a gift.”

- Result: The LLM excels here. It handles social norms and tone very well.

- Why it works: There is no “fact” to get wrong. It is purely language manipulation.

Scenario B: The Research Buddy (The Wrong Way)

You ask: “Who is the CEO of [Obscure Small Company] and what is their email?”

- Result: The AI might give you a name from 2019, or invent a name entirely because it looks like a CEO name.

- Why it fails: The model’s training data has a cut-off date (it doesn’t know what happened today unless it’s connected to live search), and it might hallucinate private info.

Actionable Tip: If you are using a chatbot that has access to the web (like Copilot or Gemini), explicitly ask it to “Search the web for the latest info.” This pushes it to look up current data rather than relying only on what it memorized during training.

Are They “Smart”?

This is a philosophical debate, but practically speaking: sort of.

They don’t have feelings, consciousness, or desires. They don’t “want” to help you; they are mathematically compelled to complete the pattern you started.

However, they display “emergent behaviors.” When you pile enough data and parameters together, the model starts being able to do things it wasn’t explicitly taught, like translating languages it rarely saw or solving logic puzzles.

I like to think of them as incredibly well-read parrots. They can repeat brilliant things and solve complex problems based on what they’ve seen before, but they don’t truly “understand” the world.

Getting Started: Your First Steps

If you haven’t dived in yet, don’t be intimidated. You don’t need to be a programmer. You just need to be able to type (or speak).

- Pick a Platform: ChatGPT (OpenAI), Gemini (Google), and Claude (Anthropic) are the big three. Most have free tiers.

- Start Simple: Ask it to explain a concept you already know well. This helps you see its strengths and weaknesses.

- Iterate: If the first answer is boring, tell it! “That was too formal. Rewrite it to sound like a pirate.” (Seriously, try it. It’s fun).

Large Language Models are powerful tools, but they are just that: tools. They aren’t replacing human creativity; they are augmenting it. Just remember to fact-check the important stuff, and you’ll be ahead of most users.

For more on getting the most out of your tech, you might like our article on [Essential Privacy Settings for Your Smartphone].

Editor — The editorial team at Prowell Tech. We research, test, and fact-check each guide to ensure accuracy and provide helpful, educational content. Our goal is to make tech topics understandable for everyone, from beginners to advanced users.

Disclaimer: This article is educational and informational. We’re not responsible for issues that arise from following these steps. For critical issues, please contact official support (Google, Apple, Microsoft, etc.).

Last Updated: December 2025
