Contents

- 1 Are ChatGPT, Grok, and Gemini Ruining Social Media? The Increasing Amount of Synthetic Content

- 1.1 The Evolution of “AI Slop,” And A New Equation For Social Media Ecosystems

- 1.2 Identifying the Different Types of Synthetic Content

- 1.3 How AI Algorithms Make the Problem Even Worse

- 1.4 The Feedback Loop Problem

- 1.5 An Increasingly Synthetic World and the Search for Authenticity

- 1.6 The Dilemma of Personalization vs Authenticity

- 1.7 The Corporate Response: Are the Platforms Doing Enough?

- 1.8 All the Good Stuff: AI and Social Media

- 1.9 Content Recommendations Based on Preferences

- 1.10 Improved Search Results

- 1.11 Language Translation

- 1.12 Spam and Malware Detection

- 1.13 How Social Media May Migrate in an AI-Filled Future

- 1.14 Scenario 1: The Great Authentication Disruption

- 1.15 Scenario 2: The Collapse of Trust

- 1.16 Scenario 3: The Adaptive Evolution

- 2 Conclusion: Not Destroyed, but Changed Forever

Are ChatGPT, Grok, and Gemini Ruining Social Media? The Increasing Amount of Synthetic Content

The digital world of 2025 seems more and more artificial. Scroll through any social media app, and you’ll come across a deluge of AI-generated material — from surreal images and deepfaked videos to boilerplate text that comes close to passing for human writing. What started as a trickle of novelty content has become a torrent that’s changing how we relate online in significant ways. With AI tools like ChatGPT, Grok and Gemini growing in accessibility and power, we’re being compelled to face an uncomfortable question: Is artificial intelligence ruining social media?

The Evolution of “AI Slop,” And A New Equation For Social Media Ecosystems

A term you may have seen is “AI slop,” which describes the low-quality, AI-generated content overwhelming our feeds. This material is not produced to inform or entertain; it is designed to provoke reactions, game algorithms and monetize attention. According to research by scientists at Stanford and Georgetown University, posts from accounts users do not follow, which are heavily promoted by recommendation algorithms, rose sharply from 8 percent of total posts in 2021 to 24 percent in 2023, underscoring how quickly AI-generated content has taken hold of the feeds we all scroll.


Identifying the Different Types of Synthetic Content

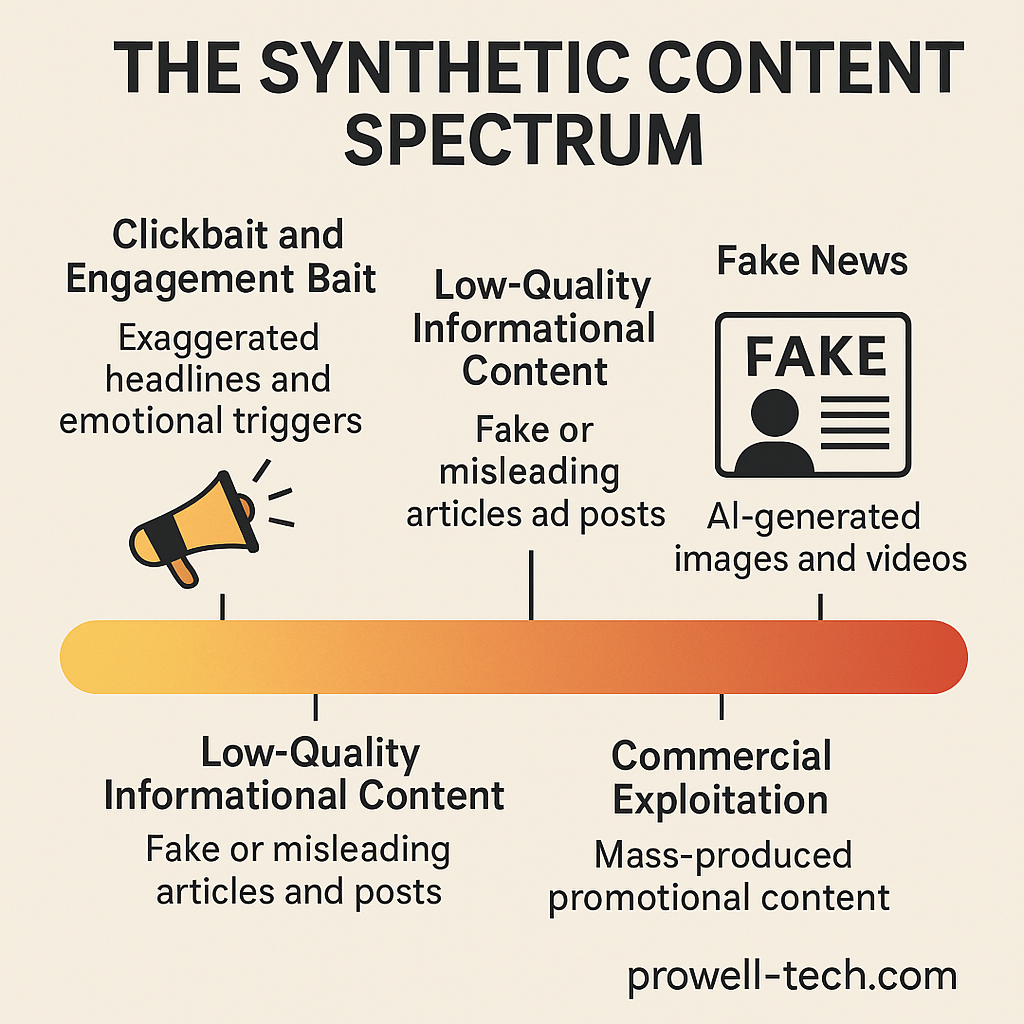

AI-generated content on social media can be grouped into a few broad categories:

Clickbait and engagement bait: Large volumes of content crafted to exploit social media algorithms and steer traffic toward monetization schemes. These posts usually feature exaggerated headlines, fake celebrity stories and other material meant to provoke emotional reactions and shares.

Low-quality informational content: AI-generated articles, posts and comments that seem informative but contain incorrect, vague or misleading information. During a crisis such as a natural disaster, this content can impede the spread of accurate information.

Fake news and deepfakes: AI-generated images and videos that can be nearly indistinguishable from authentic content. These range from harmless celebrity mash-ups to threatening political manipulation and misinformation.

Commercial exploitation: Brands and marketers using AI tools to churn out cheap content at scale, often without the necessary disclosures or best practices.

According to the World Economic Forum, misinformation and disinformation, increasingly fueled by generative AI, pose the biggest threat to nations, corporations and people over the next two years. This isn't simply annoying content; it marks a fundamental shift in our information ecosystem.

How AI Algorithms Make the Problem Even Worse

The problem isn’t just that AI tools can generate content; it’s that social media platforms' algorithms actively promote it. AI-enabled recommendation systems are engineered to maximize engagement at scale, not truthfulness or quality.

“AI algorithms have the ability to monitor user behavior and preferences that enables them to find tailored content and recommendations. This makes it easier for people to discover the new and interesting; it connects them with people and businesses they are likely most interested in,” one source in the industry explains. Although this seems like good news, the catch is that these very algorithms tend to amplify sensationalist or misleading information, because it leads to more engagement.

Social media algorithms analyze user behavior and rank content using engagement signals such as likes, comments, shares and watch time. The process acts as an amplifier: the more engagement a post receives, the more visibility it gets, which can snowball into viral trends. That makes feeds a veritable playground for AI-generated content, especially content whose creators have deliberately built it around emotional triggers.
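To make the amplification effect concrete, here is a minimal Python sketch of engagement-based ranking. The signal weights, the `Post` fields and all function names are hypothetical: platforms do not publish their ranking formulas, and real systems rely on learned models rather than a fixed weighted sum. The second function simulates the feedback loop described above, assuming each round's impressions are split in proportion to the engagement a post has already accumulated.

```python
from dataclasses import dataclass

# Hypothetical weights; real platforms use learned models, not a fixed sum.
WEIGHTS = {"likes": 1.0, "comments": 2.0, "shares": 3.0, "watch_seconds": 0.1}


@dataclass
class Post:
    post_id: str
    likes: int = 0
    comments: int = 0
    shares: int = 0
    watch_seconds: float = 0.0


def engagement_score(post: Post) -> float:
    """Weighted sum of interaction signals (illustrative only)."""
    return (
        WEIGHTS["likes"] * post.likes
        + WEIGHTS["comments"] * post.comments
        + WEIGHTS["shares"] * post.shares
        + WEIGHTS["watch_seconds"] * post.watch_seconds
    )


def rank_feed(posts: list[Post]) -> list[Post]:
    """Show the most-engaged-with posts first."""
    return sorted(posts, key=engagement_score, reverse=True)


def simulate_amplification(
    engagement_rates: dict[str, float], rounds: int, impressions_per_round: int = 1000
) -> dict[str, float]:
    """Return each post's share of total engagement after `rounds` rounds.

    Each round, impressions are allocated in proportion to accumulated
    engagement, and each post converts its impressions into new engagement
    at its own (hypothetical) rate.
    """
    engagement = {post_id: 1.0 for post_id in engagement_rates}  # equal start
    for _ in range(rounds):
        total = sum(engagement.values())
        # Compute all shares first so every post is judged on the same snapshot.
        shares = {pid: value / total for pid, value in engagement.items()}
        for pid, rate in engagement_rates.items():
            engagement[pid] += shares[pid] * impressions_per_round * rate
    total = sum(engagement.values())
    return {pid: value / total for pid, value in engagement.items()}
```

Calling `simulate_amplification({"measured_post": 0.02, "outrage_bait": 0.05}, rounds=10)` shows the outrage-bait post's share of engagement growing every round, even though both posts start level: a modest edge in emotional pull compounds into dominance once visibility is tied to past engagement.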

The Feedback Loop Problem

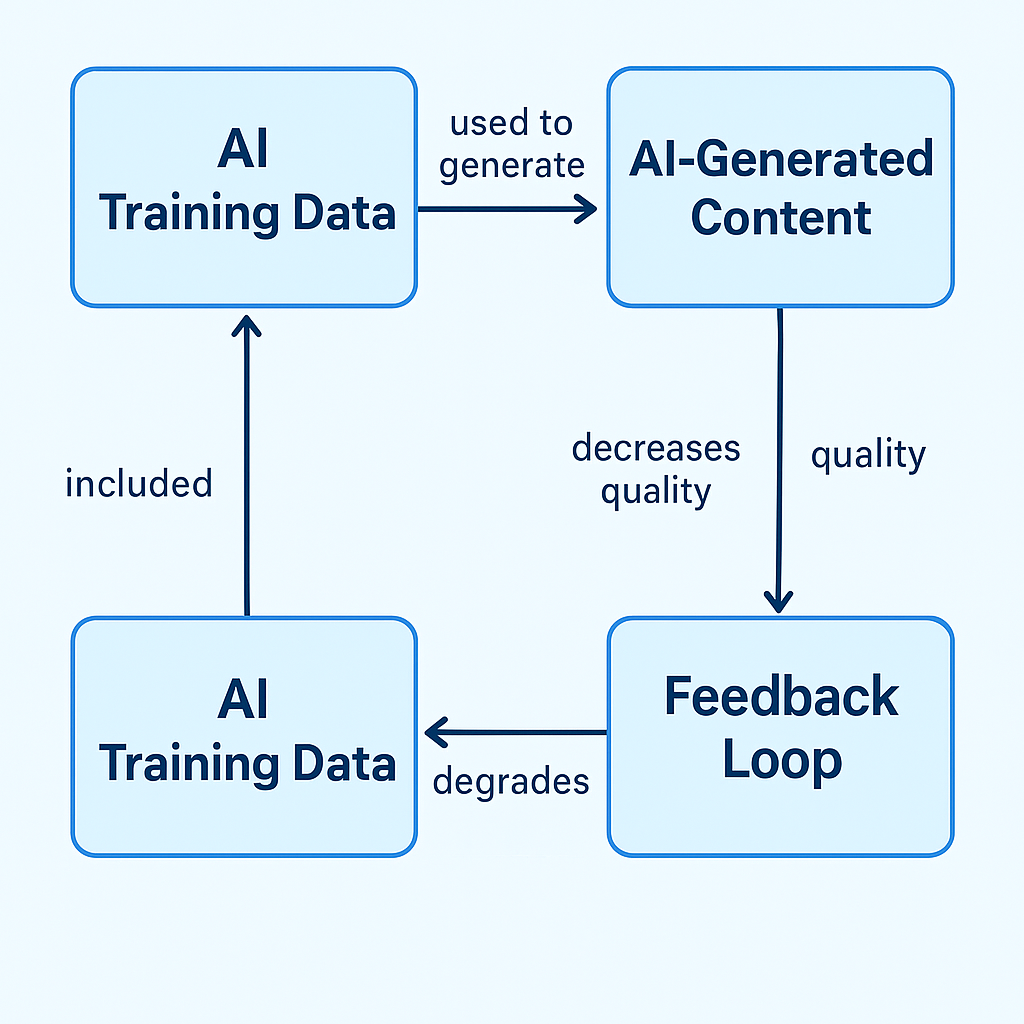

One especially worrisome problem is what experts refer to as “recursive training.” It happens when AI systems are trained on datasets increasingly filled with AI-generated material. The result is a feedback loop in which outputs become more and more homogenized, errors and biases compound, and the overall quality of content on the web degrades further.
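The homogenizing effect can be illustrated with a deliberately simplified toy model in Python. It assumes, as a stand-in for real training dynamics, that each model generation slightly over-produces its most common outputs (modeled here as temperature sharpening with T < 1) and that the next generation learns exactly that output distribution. The starting distribution, the temperature and the function names are hypothetical; the point is only that diversity, measured as Shannon entropy, shrinks with every pass.

```python
import math


def sharpen(dist: dict[str, float], temperature: float) -> dict[str, float]:
    """Reweight p -> p ** (1 / T) and renormalize; T < 1 favors common outputs."""
    weights = {k: p ** (1.0 / temperature) for k, p in dist.items()}
    total = sum(weights.values())
    return {k: w / total for k, w in weights.items()}


def entropy_bits(dist: dict[str, float]) -> float:
    """Shannon entropy in bits: a simple measure of output diversity."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def recursive_training(
    dist: dict[str, float], generations: int, temperature: float = 0.8
) -> tuple[dict[str, float], list[float]]:
    """Train each generation on the previous generation's (sharpened) output."""
    history = [entropy_bits(dist)]
    for _ in range(generations):
        dist = sharpen(dist, temperature)
        history.append(entropy_bits(dist))
    return dist, history
```

Starting from `{"style_a": 0.4, "style_b": 0.3, "style_c": 0.2, "style_d": 0.1}` and running ten generations, entropy falls at every step and `style_a` ends up with most of the probability mass, while the rarer styles all but disappear.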

The Deepfake Dilemma and Trust Erosion

One of the biggest threats AI poses to social media is deepfakes: extremely realistic fake videos, images and audio that can be virtually indistinguishable from the real thing.

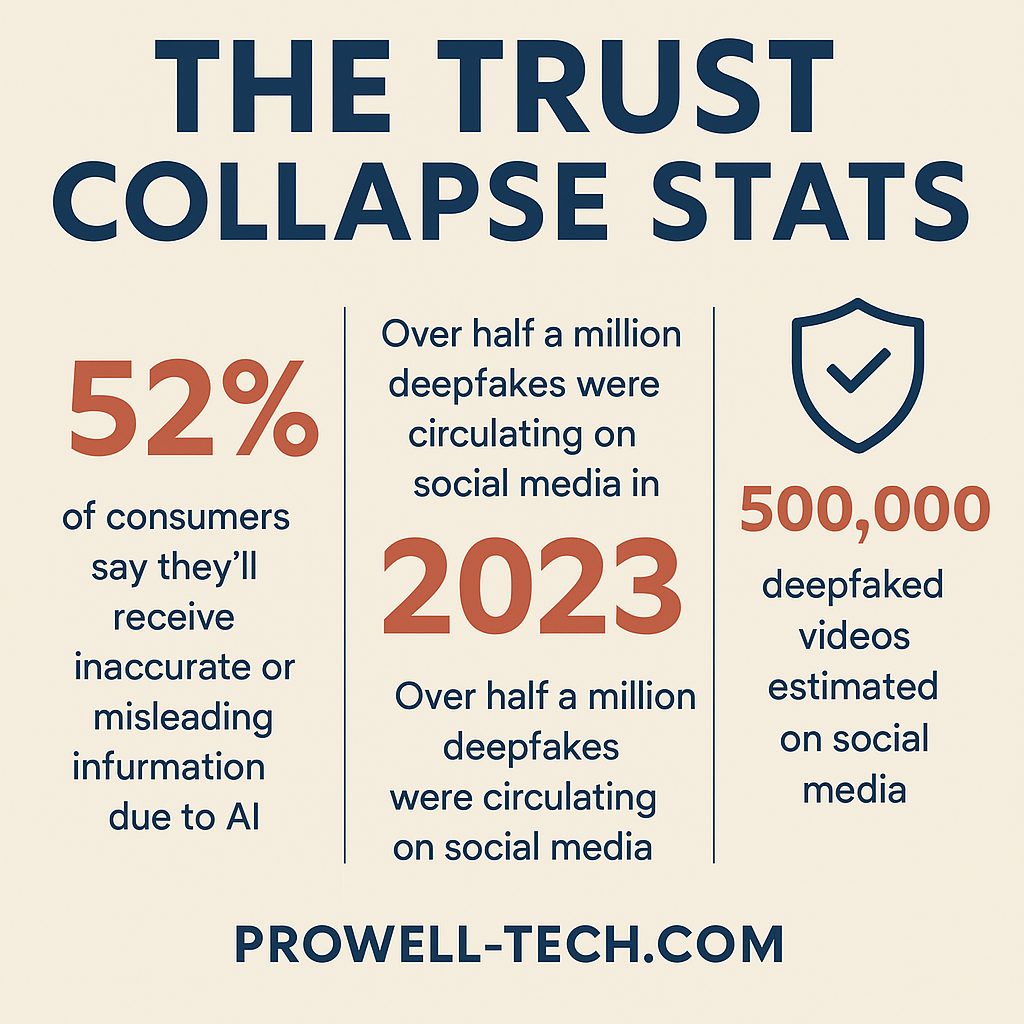

Researchers estimated that over half a million deepfake videos were circulating on social media in 2023. These aren’t just fun celebrity face swaps; they are increasingly used to manipulate politics, harass people and spread misinformation. For instance, thousands of registered Democratic voters in New Hampshire received fraudulent robocalls encouraging them not to vote. The AI-generated voice, which imitated President Joe Biden, told recipients that the upcoming state primary would be an easy win, so they should “save” their votes for future elections.

In Bangladesh, deepfake videos of two female opposition politicians in swimming costumes sparked public outrage in a country where modest dress has traditionally been the standard for women. These instances demonstrate that AI-created content can have real-world effects beyond annoyance.

An Increasingly Synthetic World and the Search for Authenticity

With the rise of AI-generated content, users are starting to wonder what they can trust. According to Adobe’s State of Digital Customer Experience research, about 52% of consumers say they expect AI to expose them to inaccurate or misleading information. This loss of trust has serious implications for social media platforms, which depend on user trust to function.

“AI content is great, but it’s not real, and authentic,” reads one Reddit post about social media’s future amid the spread of AI. The sentiment reflects a growing fear that as AI becomes more capable, nothing online will seem real anymore, which could prompt users to abandon social media platforms en masse.

The Dilemma of Personalization vs Authenticity

In a way, social media platforms are caught in a Catch-22: AI enables unprecedented levels of personalization, a major selling point for these platforms, yet that same technology threatens the authenticity users ultimately crave.

“AI can analyze user behavior and preferences to suggest content which is more likely to engage them,” one industry source says. That sounds great in theory, but combined with the tsunami of other AI-generated content, it contributes to a landscape where users are increasingly served engineered content rather than anything that feels genuinely alive.

The Corporate Response: Are the Platforms Doing Enough?

Social media platforms have taken action against AI-generated content and begun introducing policies to address its worst manifestations.

Meta, the owner of Facebook and Instagram, uses a combination of technology and human review. An algorithm scans uploaded content, and posts identified as AI-generated receive an “AI Info” label warning users that the content may not be what it seems. Other platforms are adopting similar labeling systems.

But critics say those measures fall short. Techniques for producing fake content are advancing faster than detection methods, creating an ongoing arms race between content creators and platform moderators. Users can also strip labels by downloading AI-generated content and re-uploading it, bypassing platform safeguards.

All the Good Stuff: AI and Social Media

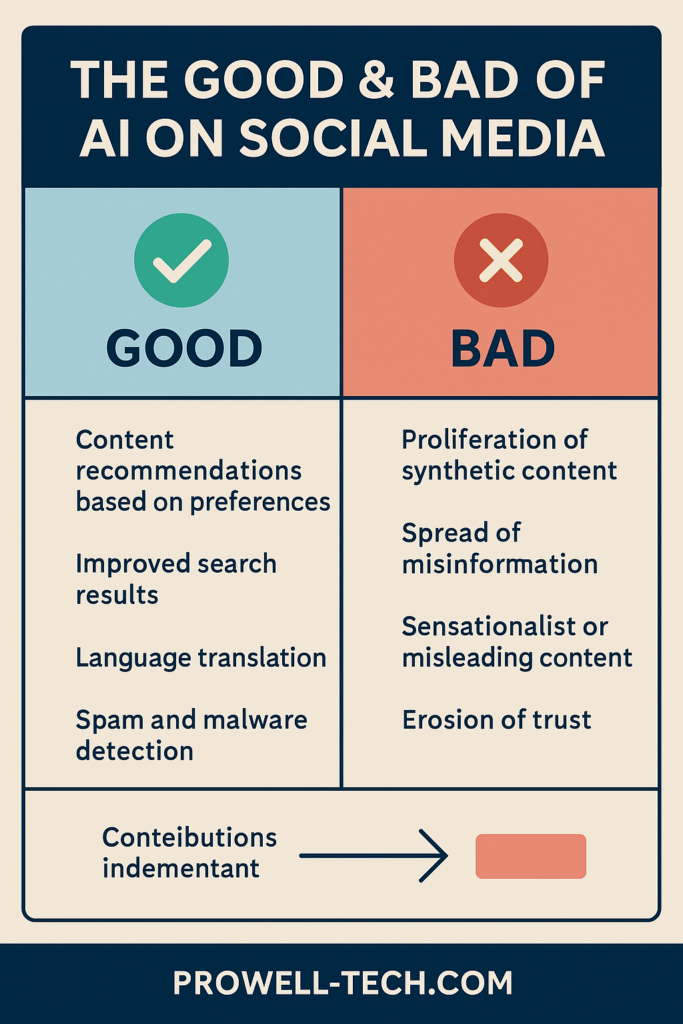

Despite these caveats, AI itself isn’t inherently bad for social media. Thoughtfully implemented, AI can improve the social media experience in a number of ways:

Content Recommendations Based on Preferences

AI can analyze user behavior and preferences to suggest the content a user is most likely to find interesting. This helps users discover fresh, intriguing content while saving time they would otherwise spend on irrelevant material.

Improved Search Results

The use of AI can enhance the accuracy and relevancy of search results, allowing users to find the information they need more quickly.

Language Translation

AI can translate between many languages in real time, helping users connect seamlessly with others around the world.

Spam and Malware Detection

AI helps social media platforms detect spam and malware and remove them at scale.

Efforts are also under way to counter the negative effects of AI on social media. For instance, the Content Authenticity Initiative (CAI), which now has about 3,000 members worldwide, promotes open standards that record where content originated and how it was edited, creating tamper-evident provenance.
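As a rough illustration of how tamper-evident provenance works, the Python sketch below binds a few provenance claims to a file's SHA-256 hash and signs them. This is not the CAI's actual format: real provenance standards embed certificate-based public-key signatures in the media file, whereas this sketch substitutes a shared-secret HMAC for simplicity, and the key, field names and functions are all hypothetical.

```python
import hashlib
import hmac
import json

# Hypothetical signing key. Real provenance standards use certificate-based
# public-key signatures; HMAC with a shared secret is a simplified stand-in.
SIGNING_KEY = b"demo-key-not-for-production"


def make_manifest(content: bytes, creator: str, tool: str) -> dict:
    """Bind provenance claims to the exact bytes of a media file."""
    claim = {
        "creator": creator,
        "generator": tool,  # e.g. a camera app or an AI image tool
        "content_sha256": hashlib.sha256(content).hexdigest(),
    }
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(content: bytes, manifest: dict) -> bool:
    """Return True only if the claims are unaltered and match the content."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claims themselves were edited
    # The claims are intact; now check that the media bytes were not changed.
    return manifest["claim"]["content_sha256"] == hashlib.sha256(content).hexdigest()
```

Verification fails if either the media bytes or the recorded claims change. What a scheme like this cannot do on its own is stop someone from stripping the manifest entirely, which is why downloading and re-uploading content can still defeat labeling.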

How Social Media May Migrate in an AI-Filled Future

Looking ahead, there are a number of possible ways social media might evolve to deal with the tide of AI content:

Scenario 1: The Great Authentication Disruption

One potentially good thing emerging from the mess is what some commentators are dubbing “the authentication revolution.” “AI taking over and ruining social media platforms like Instagram, X, Twitter is a good thing,” as one YouTube commentator puts it. The reasoning: rampant deepfake misinformation will lead people to distrust everything reported on social media, while AI influencers will break our parasocial relationships with creators, leaving everyone yearning for human, natural, real content.

In this scenario, the sheer proliferation of AI content could dilute feeds so thoroughly that people come to appreciate verified, authentic human content even more. Platforms could implement strong verification systems that confirm real people are behind what gets posted, and users could migrate toward smaller, tighter-knit communities where authenticity is easier to establish.

Scenario 2: The Collapse of Trust

A graver possibility is “the trust collapse.” If AI-generated content continues to swamp social media services unchecked, users may learn to distrust everything they encounter online. That could trigger a broad backlash against social media as a source of information or connection, with significant upheaval for these platforms and the digital economy built around them.

Scenario 3: The Adaptive Evolution

The most realistic scenario may be a kind of adaptive evolution, in which both platforms and users develop new norms, tools and literacies for dealing with an AI-saturated landscape. This might involve:

New forms of media-literacy education that empower users to recognize AI-generated content.

Platform-level tools that improve the labeling and filtering of synthetic content.

New social standards for the proper use of AI in content creation.

Technical standards that encode content provenance information in media files.

Conclusion: Not Destroyed, but Changed Forever

Is AI ruining social media? AI tools like ChatGPT, Grok and Gemini are clearly changing social media in profound ways, but “ruining” is probably too simplistic a characterization. What is obvious is that we are in a large-scale transition in which the very definitions of engagement, trust and authenticity are being rewritten.

The challenges are real, and they are big. AI-generated content is pummeling our feeds, undermining trust, polluting the information ecosystem and perhaps even rewiring how we define truth itself. But there are also opportunities for good: more tailored experiences, improved content moderation and perhaps even a renewed appreciation for real-life human interaction.

One thing is clear: social media won’t go back to how it was before AI. The tools are too powerful, the economic incentives too strong and the capabilities too compelling. Instead, we need to work together toward an era in which AI deepens rather than damages the value of social media. That demands deliberately built platforms, an educated community of users and, most of all, a shared commitment to preserving the human core of our online relationships.

The question is not whether AI will transform social media; it already has. The challenge is how to use these tools in ways that promote meaningful communication and community, and whether we can harness these powerful technologies in service of human connection, information and participation. That challenge belongs to all of us who use, shape and give meaning to our shared social spaces, not only to the technologists who build these systems.