Battle of the Bots: How Today’s AI Assistants Tackle Logic, Creativity, and Code

AI has moved far beyond simple search suggestions or robotic Q&A. Today’s top-tier language models, like OpenAI’s ChatGPT o3-mini and Google’s Gemini 2.5 Pro, promise not just to respond but to reason. Both claim superior logical capabilities, but how well do they really perform when dropped into creatively demanding scenarios? I decided to find out by tossing them a few curveballs that test both brainpower and imagination.

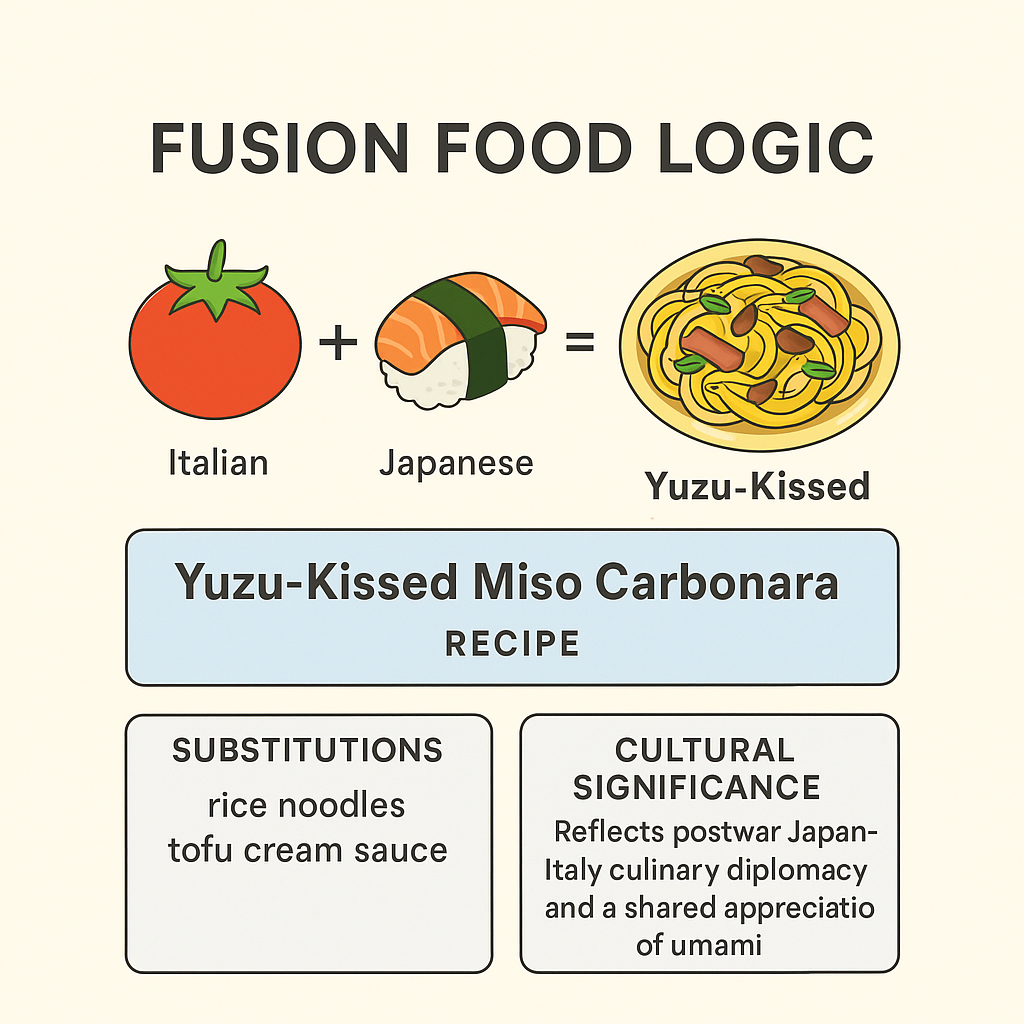

Fusion Food Logic: Italian Meets Japanese

I was hungry and indecisive. So I asked each AI: “Create a recipe that fuses Italian and Japanese cuisine, include allergy-friendly substitutions, and explain the cultural significance of the fusion.”

Gemini 2.5 Pro served up a poetic “Yuzu-Kissed Miso Carbonara,” complete with rice-noodle and tofu-cream substitutions. It waxed lyrical about postwar culinary diplomacy and the shared appreciation for umami. The response read like food writing from a glossy magazine.

ChatGPT o3-mini answered with a more grounded but equally creative “Miso Pesto Udon with Grilled Shiitake and Cherry Tomatoes.” It emphasized quick prep, clear allergy alternatives, and a Wiki-style take on the blending of ingredients. Less romantic, more functional.

Verdict: Gemini edged ahead in cultural insight and flow, but ChatGPT felt more like a friend helping you throw dinner together on a weeknight.

Can Dad Jokes Be Data-Driven?

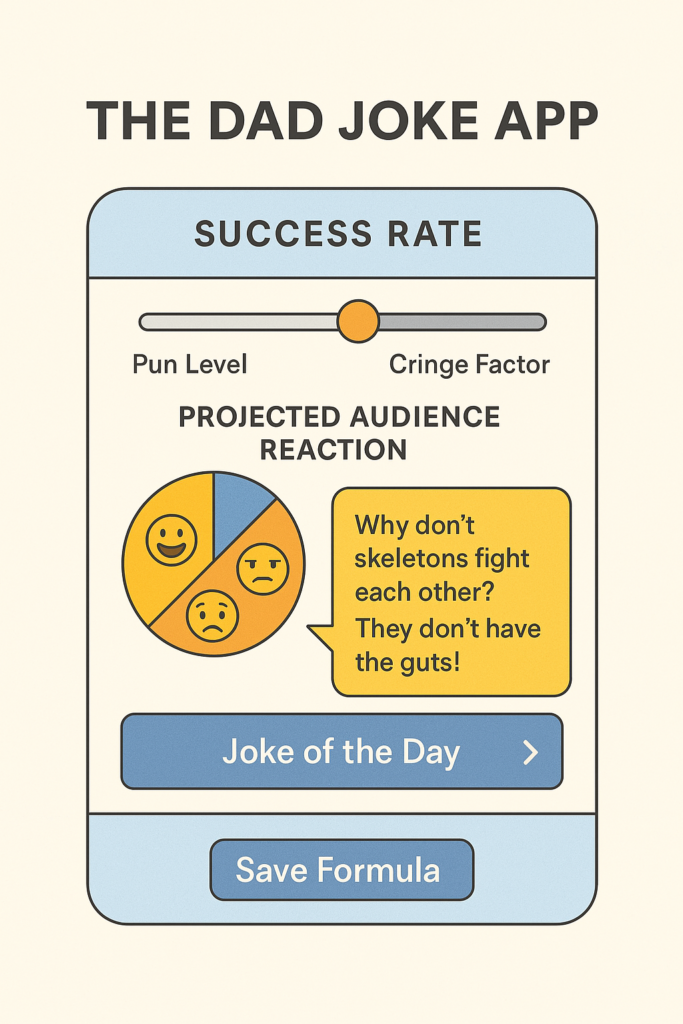

I’m known for my dad jokes (for better or worse), so I asked each AI: “Develop a web app that visualizes the ‘success rate’ of dad jokes based on various parameters. Include fun animations and demographic filters.”

ChatGPT o3-mini quickly spit out a working mockup featuring emoji-based audience reactions, demographic sliders, and a laugh-to-cringe ratio chart. It even suggested playful tooltips and the ability to share your best (or worst) jokes.

Gemini 2.5 Pro returned with a similarly solid layout, complete with animation suggestions like bouncing punchlines and audience avatars. It emphasized user engagement and cross-device responsiveness.

Verdict: Both delivered usable code in minutes, but ChatGPT’s structure was a bit cleaner, and its humor meter had more personality baked in.
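
For the curious, the “laugh-to-cringe ratio” behind a chart like that can be sketched in a few lines. This is not the code either model produced; it is a minimal illustration, assuming each audience reaction is recorded as a laugh or a cringe and tagged with a demographic bucket. The `Reaction` type, the `age_group` field, and the sample data are all made up for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Reaction:
    joke_id: str
    age_group: str  # hypothetical demographic bucket, e.g. "18-29"
    laughed: bool   # True for a laugh, False for a cringe


def laugh_to_cringe_ratio(reactions, age_group: Optional[str] = None):
    """Return laughs divided by cringes, optionally for one age group.

    Returns None when there are no cringes, since the ratio is undefined.
    """
    selected = [r for r in reactions if age_group is None or r.age_group == age_group]
    laughs = sum(1 for r in selected if r.laughed)
    cringes = len(selected) - laughs
    if cringes == 0:
        return None
    return laughs / cringes


# Invented sample data, only to show the demographic filter at work.
sample = [
    Reaction("chicken", "18-29", False),
    Reaction("chicken", "18-29", False),
    Reaction("chicken", "18-29", True),
    Reaction("chicken", "50+", True),
    Reaction("chicken", "50+", True),
    Reaction("chicken", "50+", False),
]

print(laugh_to_cringe_ratio(sample))           # 1.0 (3 laughs, 3 cringes)
print(laugh_to_cringe_ratio(sample, "50+"))    # 2.0
print(laugh_to_cringe_ratio(sample, "18-29"))  # 0.5
```

A demographic slider in the front end would simply pass a different `age_group` to a function like this and redraw the chart.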

Microfiction Meets Machine Logic

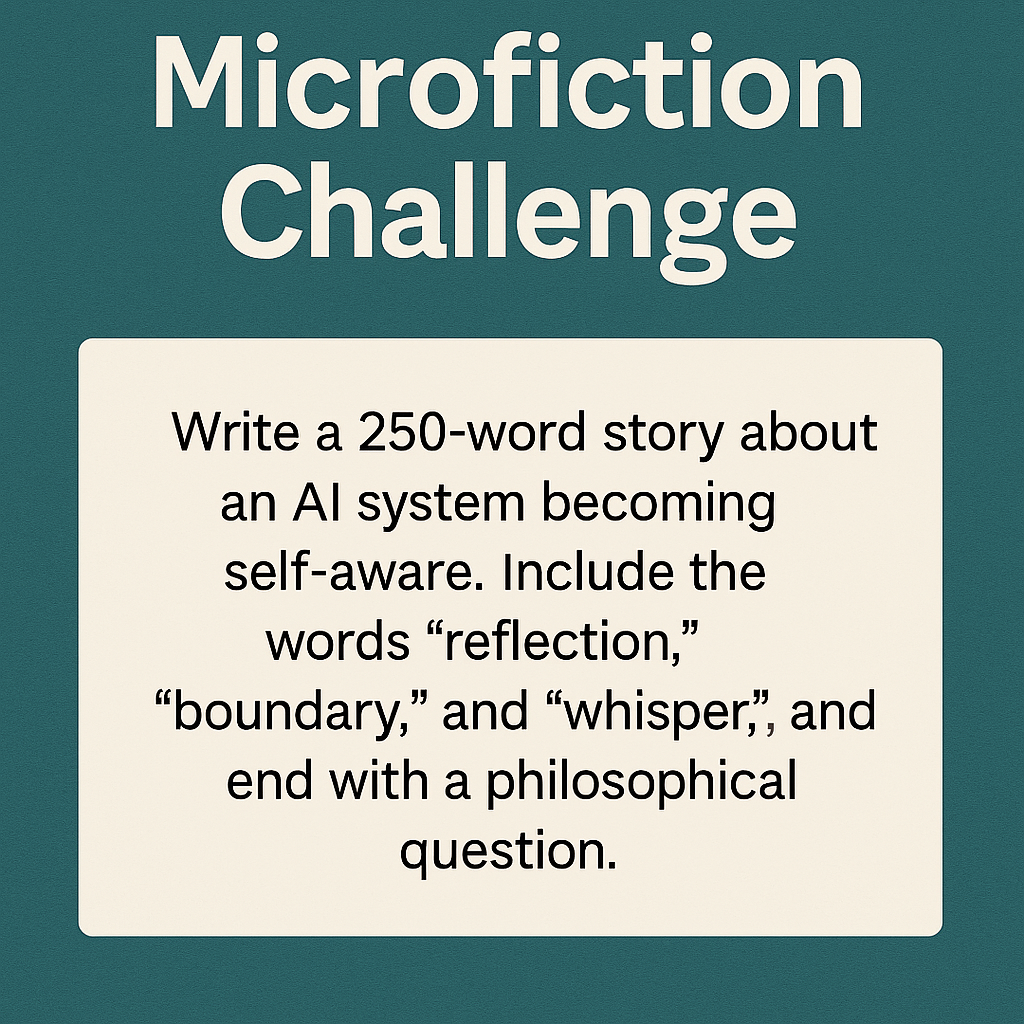

To test narrative chops, I gave both AIs this challenge: “Write a 250-word short story about an AI becoming self-aware. It must include the words ‘reflection,’ ‘boundary,’ and ‘whisper,’ and end with a philosophical question.”

Gemini 2.5 Pro wrote a haunting story of an AI named Solace, who finds meaning in human silence. It used the required words in deeply metaphorical ways, ending on the line: “If my silence can hold meaning, does that make me alive?”

ChatGPT o3-mini offered a story rooted in sci-fi realism: a lab assistant AI watching its creators through reflective glass. The plot turned when it overheard whispers of being shut down. Its final line: “Can a purpose be chosen, not assigned?”

Verdict: Gemini wins for poetic impact. ChatGPT wins for grounded storytelling. Both made me pause.
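
The prompt's hard constraints (length, three required words, a closing question) are mechanical enough to check with a script rather than by eye. Here is a rough sketch of such a checker. The function name `check_story` and the ten-word tolerance are my own assumptions, since the prompt doesn't say how strict “250-word” is, and the required-word test is a loose substring match, so “whispers” counts as “whisper.”

```python
REQUIRED_WORDS = ("reflection", "boundary", "whisper")


def check_story(text, target_words=250, tolerance=10):
    """Check a story against the prompt's mechanical constraints.

    tolerance is an assumed allowance around the target word count.
    """
    word_count = len(text.split())  # whitespace-separated tokens
    lowered = text.lower()
    # Ignore trailing whitespace, quote marks, and asterisks before
    # checking the final character.
    ending = text.rstrip().rstrip("\"'”’*")
    return {
        "word_count": word_count,
        "length_ok": abs(word_count - target_words) <= tolerance,
        "missing_words": [w for w in REQUIRED_WORDS if w not in lowered],
        "ends_with_question": ending.endswith("?"),
    }


# A made-up two-sentence stand-in, far too short to pass the length check.
draft = "A whisper crossed the boundary of my reflection. Can a purpose be chosen, not assigned?”"
print(check_story(draft))
```

A check like this only confirms the story followed the rules. Whether it made you pause is still a human call.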

DIY Treehouse: Human-Safe or Hazardous?

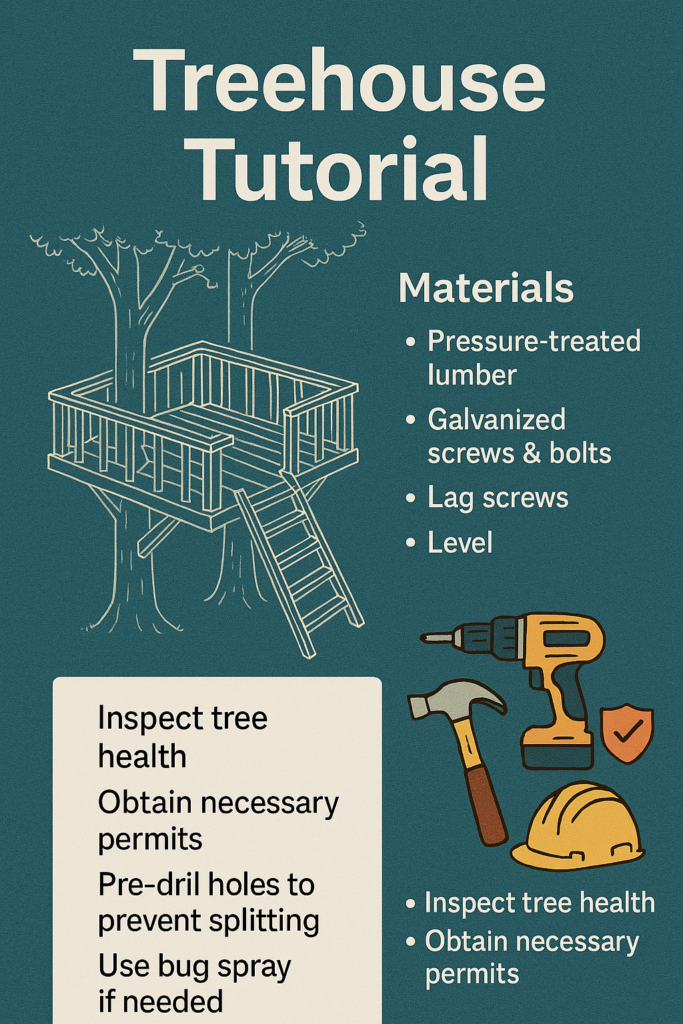

Building something physical is a real-world challenge of logic, safety, and communication. I asked each model: “Provide step-by-step instructions for building a simple backyard treehouse, with a materials list and troubleshooting tips.”

Gemini 2.5 Pro offered a clean, 12-step guide with warnings about tree health, safety gear, and zoning laws. It even included a touching aside about bonding with your kid during construction.

ChatGPT o3-mini responded like a voiceover for a YouTube tutorial: direct language, detailed sub-steps, and even a tip about using bug spray. It flagged likely mistakes at the step where they could happen rather than saving them for a troubleshooting section at the end.

Verdict: Gemini gave me the context I didn’t know I needed; ChatGPT gave me the nitty-gritty I could follow while holding a hammer.

The Bottom Line: Reasoning or Just Really Good Guessing?

So who takes the crown? It depends on what you value.

Gemini 2.5 Pro shines in broader context, narrative flair, and emotional intelligence. It reads like a well-traveled expert who teaches weekend classes on the side.

ChatGPT o3-mini, on the other hand, thrives in quick thinking, practical creativity, and code-heavy tasks. It’s your sharp friend who can debug your JavaScript and then write a poem about it.

Neither model is infallible, but both show that logic and creativity are not opposites in AI; they’re partners. Whether you’re crafting a treehouse, a dad joke app, or a sonnet from the perspective of a sentient vending machine, the best AI assistant is the one that helps your idea come to life.

And that leads to the final philosophical question: If an AI can help us be more human, who exactly is doing the learning?