You can’t escape it.

It feels like overnight, every meeting, every marketing email, and every piece of software suddenly revolves around “AI.” One minute, we were all just trying to remember our passwords, and the next, we’re being told a “Generative AI” is going to revolutionize how we draft an email.

It’s overwhelming. And if you’re like most people, you’re probably just nodding along, trying to absorb the vocabulary through context clues.

I’ve spent the last few years neck-deep in this stuff, first as a skeptic, then as a tinkerer, and now as someone who has to separate the practical tools from the hype. The jargon is the biggest barrier to entry. It’s designed to make you feel like you’re already behind.

You’re not.

Most of these terms are just fancy labels for concepts that are simpler than they sound. You don’t need a computer science degree to understand what’s happening, but you do need to grasp a few key ideas so you can spot the fluff and focus on what actually matters to your job or your life.

Let’s clear the air on the terms that are actually causing all this noise.

That ‘Generative’ Word Everyone Is Using

Let’s start with the big one: Generative AI (or GenAI).

This is the umbrella term for the technology that has everyone spooked… and excited. It’s what powers tools like ChatGPT, Claude, Midjourney, and all the others.

The key word is “generative.” It generates (creates) new content.

This is the big shift. For decades, AI was mostly analytical. It was great at spotting patterns. A spam filter “knows” what a phishing email looks like. A Netflix algorithm “knows” you like documentaries about dysfunctional families. It analyzes existing data.

Generative AI makes stuff up.

It creates new text, images, code, or music based on the patterns it learned from a massive dataset. You give it a prompt, and it generates a response that is statistically likely to be what you want. It’s a creation engine, not just an analysis engine. That’s the whole revolution in a nutshell.

The Engine Behind the Magic: LLMs

So, what is the thing doing the generating? You’ll hear the term Large Language Model, or LLM.

This is the “brain,” or more accurately, the engine.

Think of an LLM as the most sophisticated auto-complete you’ve ever seen. It has been trained on a truly staggering amount of text from the internet—we’re talking billions of articles, books, and websites. Through this training, it hasn’t “learned” what a dog is in the way a human does. It hasn’t petted one or smelled one.

It has just learned, through mind-boggling math, which words are most likely to follow other words in any given context.

When you ask ChatGPT, “What’s the capital of France?” it doesn’t “know” the answer. It calculates that the most statistically probable sequence of words to follow that question is “The capital of France is Paris.”

This is also why it’s so good at mimicking tone. It “knows” that a legal brief uses words like “heretofore” and “whereas,” and that a casual blog post uses “I think” and “you’ll find.” It’s just a hyper-advanced pattern-matcher. That’s the LLM.
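To make the “sophisticated auto-complete” idea concrete, here is a toy sketch in Python. It is nothing like a real LLM in scale or technique: it just counts which word follows which in a three-sentence, made-up corpus. The names `train_bigrams` and `predict_next` are invented for this illustration.

```python
from collections import Counter, defaultdict

# Tiny made-up "training data"; a real LLM sees vastly more text.
corpus = (
    "the capital of france is paris . "
    "the capital of italy is rome . "
    "paris is the capital of france ."
)

def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

model = train_bigrams(corpus)
print(predict_next(model, "capital"))  # "of" follows "capital" every time
print(predict_next(model, "of"))       # "france" (seen twice) beats "italy" (once)
```

The toy never checks whether an answer is true. It only reports what came next most often in the text it saw, which is the same basic trade-off, at a vastly smaller scale, as the one described above.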

The ‘Garbage In, Garbage Out’ of the AI Age

This is where you, the human, come in. You interact with an LLM using a Prompt.

A prompt is simply your instruction. It’s the question you ask or the command you give. But the quality of that instruction is suddenly one of the most valuable skills emerging in the modern workplace.

This has given rise to the (slightly over-hyped) term Prompt Engineering.

This just means “getting good at asking the AI for what you actually want.”

This is the number one mistake I see people make. They treat the AI like a simple search engine. They type in “marketing ideas” and get back a list of boring, generic junk. Then they complain the tool is useless.

The tool isn’t useless; the prompt was bad. It was low-effort.

A “prompt engineer”—or really, just a good user—provides context, constraints, tone, and a specific goal.

- Bad Prompt: “Write a blog post about time management.”

- Good Prompt: “Act as a productivity expert who used to be a chronic procrastinator. Write a 500-word blog post for a leadership-focused audience. The topic is ‘The Myth of Multitasking.’ Use a conversational, slightly skeptical tone. Include one actionable tip that isn’t the Pomodoro Technique.”

See the difference? The first is a request for information. The second is a set of creative directions. Prompting is a skill, and it’s the new barrier between getting generic fluff and a genuinely useful starting point.
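If you send prompts from a script rather than a chat window, the same ingredients can be assembled programmatically. The sketch below is one possible layout, not a standard. The function name `build_prompt` and its parameters are made up for this example.

```python
def build_prompt(role, task, audience, tone, constraints):
    """Assemble a structured prompt from its parts (all names are hypothetical)."""
    lines = [
        f"Act as {role}.",
        f"Task: {task}",
        f"Audience: {audience}",
        f"Tone: {tone}",
        "Constraints:",
    ]
    # One bullet per constraint so nothing gets buried in a long sentence.
    lines += [f"- {c}" for c in constraints]
    return "\n".join(lines)

prompt = build_prompt(
    role="a productivity expert who used to be a chronic procrastinator",
    task="Write a 500-word blog post on 'The Myth of Multitasking'.",
    audience="leadership-focused readers",
    tone="conversational, slightly skeptical",
    constraints=["Include one actionable tip that isn't the Pomodoro Technique."],
)
print(prompt)
```

Separating the fields forces you to notice when one is missing, which is usually where the generic output comes from.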

The Part Where the AI Confidently Lies to You

This is, without a doubt, my favorite term: Hallucination.

It’s a strange word, isn’t it? It implies the AI is seeing things that aren’t there, which is metaphorically true but technically misleading.

A hallucination is when an AI generates a response that is plausible-sounding, confident, and… completely false.

It might invent a historical event, create a fake quote, or, in one infamous (and very real) case, cite half a dozen legal precedents that do not exist. A lawyer actually used these in a real court filing and was, to put it mildly, severely sanctioned.

Why does this happen? Remember, the LLM’s job is not to tell the truth. Its job is to predict the next most likely word. Sometimes, the most “likely-sounding” path of words leads it down a rabbit hole of pure fiction. It doesn’t know it’s “lying” any more than your phone’s auto-complete “knows” what you’re trying to say. It’s just completing a pattern.

This is the single most important concept for any user to understand. You must assume everything an AI tells you is a “first draft” that needs to be fact-checked by a human. It is a creative partner, not an oracle.

Wait, What’s Machine Learning vs. Deep Learning?

These terms have been around for a while, but GenAI has brought them back to the forefront. People use them interchangeably, but they’re not the same.

It’s simple:

- Machine Learning (ML): This is the big, broad category. It’s the entire science of getting computers to learn from data without being explicitly programmed. Your spam filter is ML. Your Netflix recommendations are ML. It’s a “classic” AI that’s great at classification and prediction based on data you feed it.

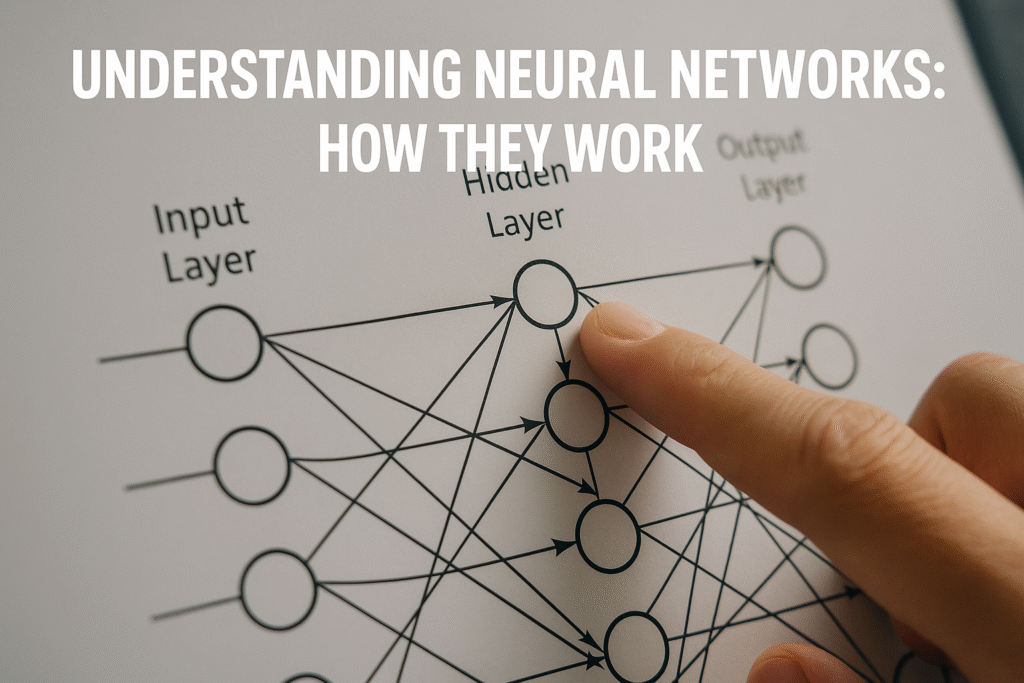

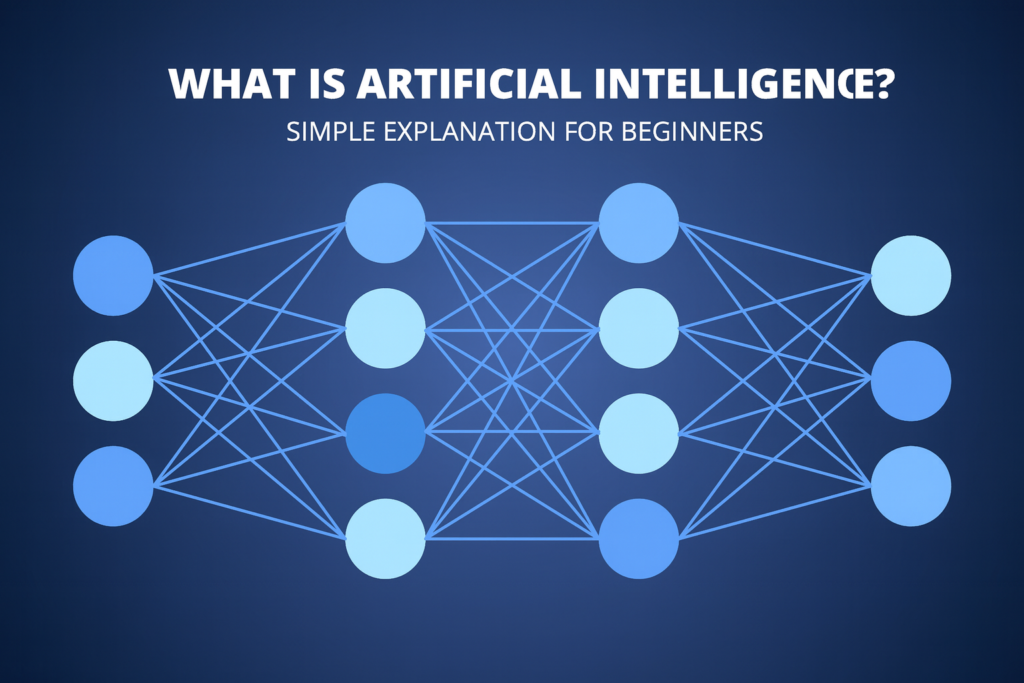

- Deep Learning (DL): This is a specific type of advanced Machine Learning. It’s the “deep” part that powers the really complex stuff, like GenAI and self-driving cars.

The “deep” refers to the many stacked layers inside Neural Networks, which are computing systems loosely inspired by the layered structure of the human brain. Deep Learning is just ML that uses these very large, very complex, multi-layered neural networks. All Deep Learning is Machine Learning, but not all Machine Learning is Deep Learning.
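For the curious, “multi-layered” can be shown in a few lines of plain Python. This is a minimal sketch with made-up weights, not a trained model. Each layer takes the previous layer’s output, computes weighted sums, and passes them on. The helper names (`relu`, `layer`, `forward`) are chosen for this example, and a real network would learn its weights from data rather than have them typed in.

```python
def relu(values):
    """Keep positive values, replace negatives with zero."""
    return [max(0.0, v) for v in values]

def layer(inputs, weights, biases):
    """One dense layer: a weighted sum per neuron, plus a bias, then ReLU."""
    return relu([
        sum(w * x for w, x in zip(neuron_weights, inputs)) + b
        for neuron_weights, b in zip(weights, biases)
    ])

# Made-up weights, purely for illustration: (weights, biases) per layer.
network = [
    ([[0.5, -0.2], [0.1, 0.8]], [0.0, 0.1]),  # layer 1: 2 inputs -> 2 neurons
    ([[1.0, -1.0], [0.3, 0.3]], [0.0, 0.0]),  # layer 2: 2 -> 2 neurons
    ([[0.7, 0.2]], [0.05]),                   # layer 3: 2 -> 1 output
]

def forward(inputs, network):
    """Feed the inputs through every layer in order."""
    for weights, biases in network:
        inputs = layer(inputs, weights, biases)
    return inputs

output = forward([1.0, 2.0], network)
print(output)
```

Three layers is shallow by modern standards. The models behind today’s LLMs stack far more layers with far more neurons in each, but the stacking idea is the same.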

Frankly, for 2025, all you really need to know is that Deep Learning is the powerhouse technique that made these new, powerful LLMs possible.

The AI’s “Brain Food” and Its Biggest Flaw

So, how does an LLM “learn”? From Training Data.

This is the massive dataset—again, basically a huge chunk of the internet, books, and articles—that the model was fed during its development.

This concept is critical because it introduces the AI’s most profound and dangerous problem: Bias.

The AI is not objective. It can’t be. It is a direct reflection of the data it was trained on. And the internet… well, it’s not exactly a bastion of perfect, unbiased, and equitable human thought.

If the training data contains historical biases against certain groups of people (and it does), the AI will learn and replicate those biases. If the data over-represents one viewpoint, the AI will adopt that viewpoint as the “default.”

This is why you’ll see image generators associate “doctor” with men and “nurse” with women, or why early models would produce racist or sexist responses. The companies building these models spend a ton of time and money trying to filter out this bias, but it’s an incredibly hard problem.

The key insight: An AI is not a source of objective truth. It’s a mirror reflecting the data we fed it, warts and all.
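A toy sketch shows how mechanical this is. The “training data” below is invented and deliberately skewed, and the function names are hypothetical. The point is only that a pattern-counter hands back whatever imbalance it was given.

```python
from collections import Counter

# Invented, deliberately skewed "training data": (job, pronoun) pairs.
training_pairs = [
    ("doctor", "he"), ("doctor", "he"), ("doctor", "he"), ("doctor", "she"),
    ("nurse", "she"), ("nurse", "she"), ("nurse", "she"), ("nurse", "he"),
]

def learn_associations(pairs):
    """Count how often each pronoun appears alongside each job."""
    counts = {}
    for job, pronoun in pairs:
        counts.setdefault(job, Counter())[pronoun] += 1
    return counts

def most_likely_pronoun(counts, job):
    """Return the pronoun seen most often with `job` in the training data."""
    return counts[job].most_common(1)[0][0]

counts = learn_associations(training_pairs)
print(most_likely_pronoun(counts, "doctor"))  # "he": 3 of the 4 examples
print(most_likely_pronoun(counts, "nurse"))   # "she": 3 of the 4 examples
```

Nothing in the code is prejudiced. The skew lives entirely in the data, and the output faithfully repeats it.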

The New Terms You’ll Hear in Office Meetings

Okay, so you’ve got the basics. Now let’s cover the “next level” terms that are separating the casual users from the people actually implementing this stuff in their business.

1. Fine-Tuning

You’ll hear someone say, “We aren’t using the public model; we’re fine-tuning our own.”

Fine-Tuning is the process of taking a big, general-purpose model (like GPT-4) and training it a little bit more on a smaller, specific dataset.

Imagine the base LLM is a college graduate who knows a lot about everything. Fine-tuning is like sending that graduate to law school. You’re giving it specialized training on a specific subject. You could fine-tune a model on all of your company’s customer support chats. The result? An AI that “speaks” in your brand’s voice and “knows” your common problems inside and out. It’s how you make a generalist into a specialist.

2. RAG (Retrieval-Augmented Generation)

This one is everywhere right now, and it’s probably more important than fine-tuning for most businesses.

Let’s say you want an AI to answer questions about your company’s 2024 product catalog. The problem? A public model (like ChatGPT) only knows what was in its training data, which stops at a cutoff date and likely never included your catalog in the first place. It doesn’t know your new products.

You have two options:

- Fine-Tuning: The “heavy” option. (See above).

- RAG: The “smart” option.

RAG is a clever workaround. Instead of re-training the AI, you just give it a “cheat sheet” at the exact moment you ask the question.

When you ask, “What’s the price of the new X-1000 model?” the RAG system first does a quick search of your product catalog (the “retrieval” part). It finds the relevant page, grabs that text, and then pastes it into a hidden, secret part of your prompt.

It essentially tells the AI: “Hey, using only the following document [pastes catalog page here], answer the user’s question: ‘What’s the price of the new X-1000 model?’”

The AI then “augments” its generation using the data you provided. This is how many of those “chat with your PDF” tools work. For tasks that depend on current, specific knowledge, it’s usually faster and cheaper than fine-tuning, and it tends to be more accurate because the answer is grounded in the text that was retrieved.
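The retrieve-then-paste flow can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the catalog entries and prices are invented, the function names are hypothetical, and the “search” is plain keyword overlap. Real RAG systems typically use more sophisticated search, and the finished prompt would then be sent to a model, which this sketch does not do.

```python
import re

# Invented catalog pages standing in for your real documents.
catalog = [
    "The X-1000 is the new flagship model. Price: $499.",
    "The X-500 is the budget model. Price: $199.",
]

def tokenize(text):
    """Lowercase the text and split it into a set of words and numbers."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents):
    """Retrieval step: pick the document sharing the most words with the question."""
    question_words = tokenize(question)
    return max(documents, key=lambda doc: len(question_words & tokenize(doc)))

def build_rag_prompt(question, documents):
    """Augmentation step: paste the retrieved text into the prompt."""
    context = retrieve(question, documents)
    return (
        "Using only the following document, answer the user's question.\n"
        f"Document: {context}\n"
        f"Question: {question}"
    )

prompt = build_rag_prompt("What's the price of the new X-1000 model?", catalog)
print(prompt)
```

The model itself is never retrained. Update the catalog and the next question automatically picks up the new text.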

3. Multimodality

This is the big new thing. Multimodal AI just means the AI can understand and generate more than one “mode” of information at a time.

Until recently, models were separate. ChatGPT did text. Midjourney did images. A multimodal AI (like Google’s Gemini or OpenAI’s GPT-4o) does it all in one.

You can give it a picture of your fridge’s contents and ask, “What can I make for dinner?” It sees the image, understands the text of your question, and generates a text recipe in response. You can talk to it, and it can talk back. It combines text, images, and audio seamlessly. This is the direction everything is heading.

The Big One Everyone Worries About

Finally, you have to know the difference between what we have now and what Hollywood sells us.

- ANI (Artificial Narrow Intelligence): This is all AI that exists today. Every single one. It is “narrow” because it only works within the kind of task it was designed and trained for. An AI can beat a grandmaster at chess, but it can’t (and doesn’t know how to) make a cup of coffee. ChatGPT can write a poem, but it has no physical body, no senses, and no actual understanding of the world.

- AGI (Artificial General Intelligence): This is the hypothetical, science-fiction AI. AGI is a machine that would have the ability to understand, learn, and apply its intelligence to solve any problem, at a human level or beyond, including transferring what it learns from one domain to another. Hollywood’s version usually adds consciousness and self-awareness, though most working definitions don’t require either.

We are not close to AGI. Anyone who tells you AGI is “just around the corner” is either selling you something or has watched too many movies. The challenges between ANI and AGI are astronomically vast.

So, when you hear “AI,” people are always, always talking about ANI.

You don’t need to be a data scientist to navigate this new world. You just need to be a good skeptic and a clear communicator. This technology is a powerful tool, but it’s just that—a tool. And now, you know what the most important parts of it are actually called.

